After considerable effort, I managed to extend the number of Linux distributions supported by TextureMind Framework and all the applications built with it, including DWorkSim and TMD. Initially I planned to support a fixed set of distributions, namely CentOS 7, CentOS 8, Rocky 9, Ubuntu 20, Ubuntu 22 and Ubuntu 24. After a week of work, I figured out how to achieve a much better result: compiling on just one distribution and running the applications on all the others, from glibc 2.17 onwards. This was possible thanks to the framework's design and its minimal external dependencies. It will greatly simplify the entire build process, because I can deploy everywhere on Linux with a single build. As an immediate effect, from now on it will be possible to download a Linux application as a single package and run it on any distribution released in the last 10 years or more.
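To make the glibc 2.17 baseline concrete, here is a minimal sketch of a check that a launcher script could run before starting an application. It is not part of the framework: the function names are hypothetical, and only `platform.libc_ver()` from the Python standard library is a real API.

```python
import platform

MIN_GLIBC = (2, 17)  # baseline named in the post


def parse_version(text):
    """Turn a version string such as '2.31' into a tuple of ints."""
    return tuple(int(part) for part in text.split(".") if part.isdigit())


def glibc_is_supported(version_text, minimum=MIN_GLIBC):
    """Return True when the given glibc version meets the minimum."""
    return parse_version(version_text) >= minimum


if __name__ == "__main__":
    # libc_ver() returns a (library, version) pair; both are empty off glibc.
    lib, version = platform.libc_ver()
    if lib == "glibc" and version:
        print(version, "supported" if glibc_is_supported(version) else "too old")
    else:
        print("glibc not detected")
```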

TextureMind Desktop V0.2 (beta)

As I promised, today I released the very first version of TextureMind Desktop. If you don't know what I'm talking about:

TextureMind Desktop (TMD) is software that allows remote access to a personal computer's desktop (the host machine) from a client device. It is composed of a server running on the host machine and a client application which connects to the server. The application exposes a simple user interface that lets the user access and interact with the desktop of the host machine.

Basically, TMD is remote desktop software like RDP or VNC, but also (hopefully) like Parsec and Moonlight. You can find the download here:

Since this is the first beta version, you may find some bugs, crashes and a lot of limitations. Note that the software is in continuous development, so most of them will be fixed as soon as possible. In the next version, there will be fewer limitations and more features. Thanks.

TextureMind Framework – Progress #26 – Remote display protocol

Ladies and gentlemen, I'm glad to announce that TextureMind Framework finally has its own remote display protocol. The protocol has been used to create another piece of software called TMD (TextureMind Desktop). The software is composed of two applications: a server running on the host machine and a client application which connects to the server. The client application exposes a simple graphical interface that lets the user access and interact with the desktop of the host machine.

In the first version, you can already view the desktop's screen and interact with the mouse and keyboard, with full support for Windows and Linux. Among the main features is an old-school programming style with as few external dependencies as possible, optimized for high performance with minimal use of hardware resources. Furthermore, its extreme portability makes it easy to use as a client / server ping-pong tool like iperf. Another strong point: it never crashes. Robust programming, together with debugging through static and dynamic code analysis, has made it possible to stream video continuously for days without ever crashing, with only a tiny amount of memory leaks due mostly to the way memory works. Not bad for something developed entirely by one person in a month.

Currently I'm working on improving the message transport protocol. I'm building reliable transport on top of UDP, so it will be possible to stream over both TCP and UDP. After that, I will improve the video codecs by adding multithreaded JPEG compression and an AV1 encoder / decoder with FFmpeg. I will also try to support hardware encoding and decoding, at least on NVIDIA and AMD GPUs. It will be possible to play games in 4K resolution at 60 fps on your LAN. For free. TMD will have its own website (ASAP) where it will be possible to find news, documentation, screenshots and downloads.

TextureMind Framework – Progress #25 – Porting on Linux

Finally I ported my entire framework to Linux. From now on, all the applications that I create for Windows will be supported on Linux too. It took a great deal of work to achieve this. It was necessary to improve the management of external dependencies, do some builds, implement a Meson-based build system for the framework and a build deployment system based on Python scripts. I had to fix literally hundreds of thousands of errors and resolve several technical issues related to the differences between the two operating systems. The first build of just the common library filled a log with 3.5 million lines of errors.

I had to analyze the errors and fix them one by one. After the common library, I had to repeat the same work for all the other components, but fortunately the errors were far fewer by then. I had to implement the missing parts for Linux, including all the window and event handling. I also had to refactor the whole font management and the way screens are presented in the window, especially the Vulkan part. It was a lot of work: even though it took little more than 2 weeks, I wrote more than 170 commits. Looking at the results in the video, I'd say it was definitely worth it. In the future, I will port my framework and all my work to other operating systems. The next one I want to support is macOS. I also have on my list: Raspberry Pi 5, Windows on Arm, Android, iOS and AmigaOS 4.1.

TextureMind Framework – Progress #24 – Preliminary work for Remoting Protocol

Currently I'm working on the development of the TMD (TextureMind Desktop) remoting protocol. I want to reach the point where it's possible to connect to a host machine as soon as possible. I took the chance to improve the framework architecture by implementing an entire plugin system. Now it's possible to add functionality through external modules.

I added YUV conversion with the I420, YV12, NV12 and NV21 pixel formats. I improved the image format so now it's possible to serialize multi-plane YUV images. I made all the RGBA pixel formats endianness-agnostic. I wrote an abstraction layer for video compression and I'm writing a plugin module with the FFmpeg libraries to support H.264 and AV1 compression. I already implemented the transport layer with support for TCP, which makes it possible to send messages between processes over the network with my serialization system.

Now I have to implement the communication channels for the remote session, in particular the display and input channels. I designed the entire architecture, and I think I made a lot of improvements compared to classic remote protocols. There will be an abstraction layer to capture or control not only the desktop but also applications. Imagine writing an application for a virtual museum where you want multiple users connected at once: you can deliver the application in the form of SaaS. A channel may have two modes of communication: per client or broadcast. As an example, the display channel is typically per client and the input channel is broadcast, but you can also extend the display channel to stream in broadcast. Finally, I designed a smart adaptive frame rate control to avoid jittering. The first version of TMD will support only software video encoding / decoding, but future versions will be optimized for the GPU, in particular NVIDIA with NVENC and AMD with AMF.
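To illustrate the YUV layouts mentioned above, here is a minimal sketch in Python (not the framework's C++ code) that converts an RGB image to I420 and repacks it as NV12. It assumes full-range BT.601 (JFIF) coefficients and simple 2x2 averaging for chroma; the framework may use different coefficients or filters, and the function names are hypothetical.

```python
def rgb_to_i420(pixels, width, height):
    """pixels: row-major list of (r, g, b); width and height must be even.
    Returns the three planes (Y, U, V); U and V are subsampled 2x2."""
    def clamp(value):
        return max(0, min(255, int(round(value))))

    y_plane = bytearray()
    u_full, v_full = [], []
    for r, g, b in pixels:
        y_plane.append(clamp(0.299 * r + 0.587 * g + 0.114 * b))
        u_full.append(-0.168736 * r - 0.331264 * g + 0.5 * b + 128)
        v_full.append(0.5 * r - 0.418688 * g - 0.081312 * b + 128)

    u_plane, v_plane = bytearray(), bytearray()
    for by in range(0, height, 2):
        for bx in range(0, width, 2):
            # Indices of the 2x2 block that shares one chroma sample.
            block = [by * width + bx, by * width + bx + 1,
                     (by + 1) * width + bx, (by + 1) * width + bx + 1]
            u_plane.append(clamp(sum(u_full[i] for i in block) / 4))
            v_plane.append(clamp(sum(v_full[i] for i in block) / 4))
    return bytes(y_plane), bytes(u_plane), bytes(v_plane)


def i420_to_nv12(y, u, v):
    """NV12 keeps the Y plane and interleaves U and V into one plane."""
    uv = bytearray()
    for cb, cr in zip(u, v):
        uv += bytes((cb, cr))
    return y, bytes(uv)
```

YV12 and NV21 use the same data with the U and V order swapped, which is why a single multi-plane image format can describe all four.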

DWorkSim v0.2

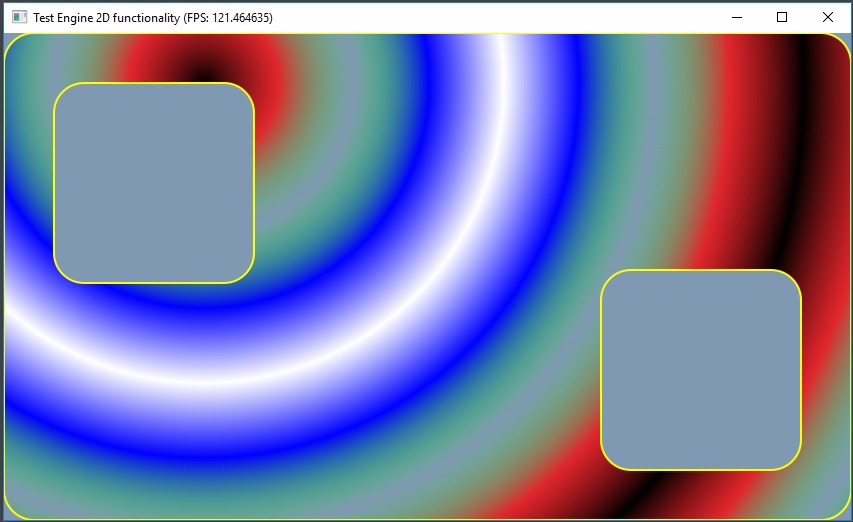

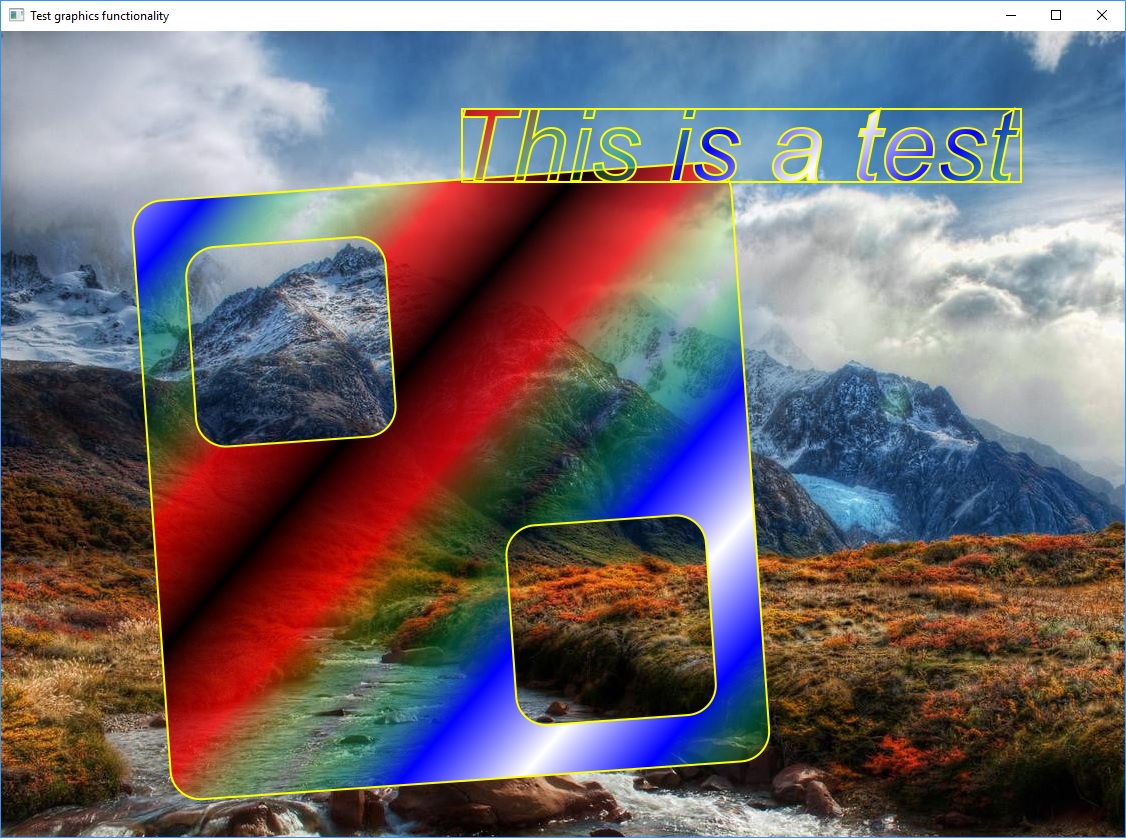

DWorkSim stands for Deterministic Workflow Simulator. It's software I created with my TextureMind Framework for testing image transmission in remoting protocols, like Microsoft Remote Desktop or AWS DCV. Frames are identified by the number in the top-left corner. Frames with the same number always have the same pixels, even if they are generated at different moments, on different machines.

DWorkSim is a good alternative to raw images for instantaneous PSNR estimation during the transmission of the animation sequence. It's probably the only software that allows you to do that, because other programs don't support both frame generation and PSNR estimation. You can also make any estimation that can be made by comparing two images, such as image coherence, blurriness, pixel accuracy, color distortion and text readability. DWorkSim is now at version 0.2. It can generate images to test both the GPU and the CPU. The GPU test differs from the CPU test, so that each one is appropriate for its target. The GPU test is performed with the Vulkan libraries, while the CPU test uses the Cairo libraries. The GPU test contains two shaders taken from Shadertoy:

- Full Spectrum Cyber: https://www.shadertoy.com/view/XcXXzS

- Where the River Goes: https://www.shadertoy.com/view/Xl2XRW
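The idea of deterministic frames and PSNR estimation described above can be sketched as follows. This is an illustration only, not DWorkSim's generator: the frame content here comes from a small 32-bit LCG seeded with the frame number (an assumption chosen because it behaves identically on every machine), and the function names are hypothetical.

```python
import math


def make_frame(frame_number, width=16, height=16):
    """Grayscale frame whose pixels depend only on the frame number."""
    state = (frame_number * 2654435761 + 1) & 0xFFFFFFFF
    pixels = []
    for _ in range(width * height):
        state = (state * 1664525 + 1013904223) & 0xFFFFFFFF  # 32-bit LCG step
        pixels.append(state >> 24)  # keep the top 8 bits as the pixel value
    return pixels


def psnr(reference, received, peak=255):
    """Peak signal-to-noise ratio in dB between two equally sized frames."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, received)) / len(reference)
    return math.inf if mse == 0 else 10 * math.log10(peak * peak / mse)
```

Because the receiver can regenerate frame N locally, it only needs the frame number to compute the PSNR of what arrived; no reference image has to be sent alongside the stream.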

DWorkSim is Freeware for now, so you can use it for testing your software. For the download, visit:

DWorkSim – Deterministic Workflow Simulator

TextureMind Framework – Progress #23 – 2D geometries

I refactored the whole geometry module to eliminate redundancy and to introduce new classes for advanced functionality. I redesigned my 2D shape model to be lighter and more compatible with current standards. For example, now it's possible to create a 2D path which is compatible with the SVG format. I implemented a simple SVG path parser, so it's possible to pass the string directly to the 2D path object, which converts it to the custom format.
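As a rough idea of what a simple SVG path parser does, here is a minimal sketch in Python. It is not the framework's parser: it handles only the absolute commands M, L, C and Z, and the output format (a list of command/number pairs) is a hypothetical stand-in for the custom path format.

```python
import re

# A number (with optional sign, fraction and exponent) or a single letter.
TOKEN = re.compile(r"[-+]?(?:\d+\.?\d*|\.\d+)(?:[eE][-+]?\d+)?|[A-Za-z]")
ARITY = {"M": 2, "L": 2, "C": 6, "Z": 0}  # coordinates per command


def parse_svg_path(d):
    """Return a list of (command, [numbers]) for absolute M, L, C, Z."""
    tokens = TOKEN.findall(d)
    segments, i, cmd = [], 0, None
    while i < len(tokens):
        tok = tokens[i]
        if tok.isalpha():
            if tok not in ARITY:
                raise ValueError(f"unsupported command: {tok}")
            cmd, i = tok, i + 1
            if ARITY[cmd] == 0:
                segments.append((cmd, []))
            continue
        if cmd is None or ARITY[cmd] == 0:
            raise ValueError("coordinates without a command")
        count = ARITY[cmd]
        args = [float(t) for t in tokens[i:i + count]]
        if len(args) != count:
            raise ValueError("truncated coordinate list")
        segments.append((cmd, args))
        i += count
        if cmd == "M":
            cmd = "L"  # in SVG, extra pairs after a moveto are implicit linetos
    return segments
```

For example, `parse_svg_path("M10 20 L30,40 Z")` yields a moveto, a lineto and a closepath segment.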

In the picture above you can see an SVG path rendered with my 2D engine and the Cairo libraries. I also added 2D geometric primitives, such as circles, ellipses, arcs, rounded rectangles and splines. These elements are now part of the 2D engine, so they are automatically converted and drawn by the graphics device. I also added two components to the geometry module: ShapeGenerator and CollisionDetector. ShapeGenerator generates 2D shapes from a few arguments and from operations between other shapes. It will be used to convert 2D paths to Bezier curves, lines or polygons, or to generate complex shapes from a math model. The CollisionDetector component will be used for collision detection between simple shapes, like points, lines, rectangles, circles and polygons. The next step is to extend these modules to the 3D world, implementing 3D geometries, collision detection and generation of lathe and extrusion objects.
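Two of the tests a collision detector for simple shapes typically needs can be sketched like this. These are textbook algorithms (closest-point test and even-odd ray casting), shown in Python with hypothetical function names; they are not taken from the CollisionDetector component itself.

```python
def circle_hits_rect(cx, cy, radius, left, top, right, bottom):
    """Circle vs axis-aligned rectangle: compare the radius with the
    distance from the center to the nearest point of the rectangle."""
    nearest_x = max(left, min(cx, right))
    nearest_y = max(top, min(cy, bottom))
    dx, dy = cx - nearest_x, cy - nearest_y
    return dx * dx + dy * dy <= radius * radius


def point_in_polygon(px, py, polygon):
    """Even-odd rule: count how many edges a horizontal ray crosses."""
    inside = False
    count = len(polygon)
    for i in range(count):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % count]
        if (y1 > py) != (y2 > py):  # edge spans the ray's y coordinate
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside
```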

TextureMind Framework – Progress #22 – OpenAL Soft

In parallel with the GUI Editor, I developed the audio part of my framework. I wrote an abstraction layer to handle the AudioContext and I created an implementation of it with the OpenAL Soft libraries (but it can be implemented with any other audio library). I also created an abstraction layer to handle audio tracks and sounds. Now it's possible to load a sample in Ogg Vorbis format and play it with the audio context. The context can play many sounds at the same time, handling the queue of sounds in flight. A sound can be played until it finishes, or played in a loop and stopped later. The audio context automatically manages the sounds that are playing in the background and those that are about to terminate. It's possible to group several sounds under the same slot to manage master volume, frequency and other parameters. For example, in a video game it would be useful to play sound effects in one slot and the music track in another, each with a separate volume. The conversion between audio models is managed automatically. You can load a Dolby Surround 7.1 audio track and play it on a stereo system, and vice versa. Finally, you can also play sounds in virtual reality, where the listener is positioned in 3D space. The next step will be to add real-time DSP effects and to load / play music in MOD file format.

300 commits in 1 month!

I'm closing the month of April with 300 commits, all in just 1 month. I wrote most of the code for the GUI Editor application and all the missing audio part, with support for Dolby Surround and 3D environments.

TextureMind Framework – Progress #21 – Gui Editor

When the application is complete, it will be forked internally to create another application called "IdealMind". With IdealMind, it will be possible to manage resources, create 2D and 3D scenes and design new applications with a programming language. The main target is to create a development environment for quick and easy prototyping of new applications, without sacrificing power and stability.

TextureMind Framework – Progress #20 – GUI is complete!

It's done! Taking advantage of the extra time I have now, I finished the GUI associated with my TextureMind framework. Compared to the previous update, I added Frame Windows, Tree Views, Tab Strips, Tool Bars and Docking Layouts. I fixed a lot of bugs, improved the management of resources and events, optimized the algorithms to draw on screen and so on. One of the big improvements is in the management of resources. I introduced the concept of a resource package. A project and its internal components may each have an associated resource package which contains materials, textures and templates. The resources in the project are considered the main package, but a single component may store an independent package of resources used for rendering itself. When the component is exported, it may save internally only the resources used for its rendering. When the component is imported, its internal resources are merged into the resources of the main project: if the GUIDs are the same, the project's existing resources are reused, otherwise the component's resources are added to the main project.
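The import rule above (reuse on matching GUID, add otherwise) can be sketched in a few lines of Python. The dictionaries and the function name are hypothetical stand-ins for the framework's resource packages.

```python
def merge_resources(project, component):
    """Merge a component's resource package into the project's package.
    Both are dicts keyed by GUID. Returns what happened to each GUID."""
    outcome = {}
    for guid, resource in component.items():
        if guid in project:
            outcome[guid] = "recycled"  # same GUID: keep the project's copy
        else:
            project[guid] = resource    # new GUID: add it to the project
            outcome[guid] = "added"
    return outcome
```

For instance, importing a component that carries GUIDs `a` and `b` into a project that already holds `a` leaves `a` untouched and adds only `b`.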

I also implemented a totally dynamic model for the loading and initialization of resources. Previously, all the resources were loaded, stored in memory and converted into GPU memory at once. Now, only the resources used for rendering within a number of consecutive frames are loaded, stored and converted. Additionally, you can specify a number of preloaded resources associated with a component: these resources are kept alive only for the lifespan of the component. In this way, it's virtually possible to explore terabytes of data stored somewhere, as in modern engines like Unreal Engine, or to optimize for hardware where RAM is limited. I have now started to work on the GUI editor, which is at a good point of development as well. The GUI editor will be used to design new interfaces and also to create new skins, with any kind of appearance. The entire GUI system is designed for applications and video games, so it must be robust across a wide range of use cases.
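One way to picture the frame-based loading described above is a cache that loads a resource on first use and drops it after it has gone unused for a few consecutive frames. The sketch below is an assumption about the general technique, not the framework's implementation; the class name and the `max_idle_frames` parameter are hypothetical.

```python
class FrameResourceCache:
    """Keeps a resource alive only while it was used in recent frames."""

    def __init__(self, loader, max_idle_frames=3):
        self.loader = loader              # callable: key -> loaded resource
        self.max_idle_frames = max_idle_frames
        self.frame = 0
        self.entries = {}                 # key -> [resource, last_used_frame]

    def get(self, key):
        entry = self.entries.get(key)
        if entry is None:                 # load lazily on first use
            entry = self.entries[key] = [self.loader(key), self.frame]
        entry[1] = self.frame             # mark as used in this frame
        return entry[0]

    def end_frame(self):
        """Advance the frame counter and evict resources left idle too long."""
        self.frame += 1
        stale = [key for key, (_, last) in self.entries.items()
                 if self.frame - last > self.max_idle_frames]
        for key in stale:
            del self.entries[key]
```

Preloaded resources tied to a component could be modeled by pinning their keys until the component is destroyed.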

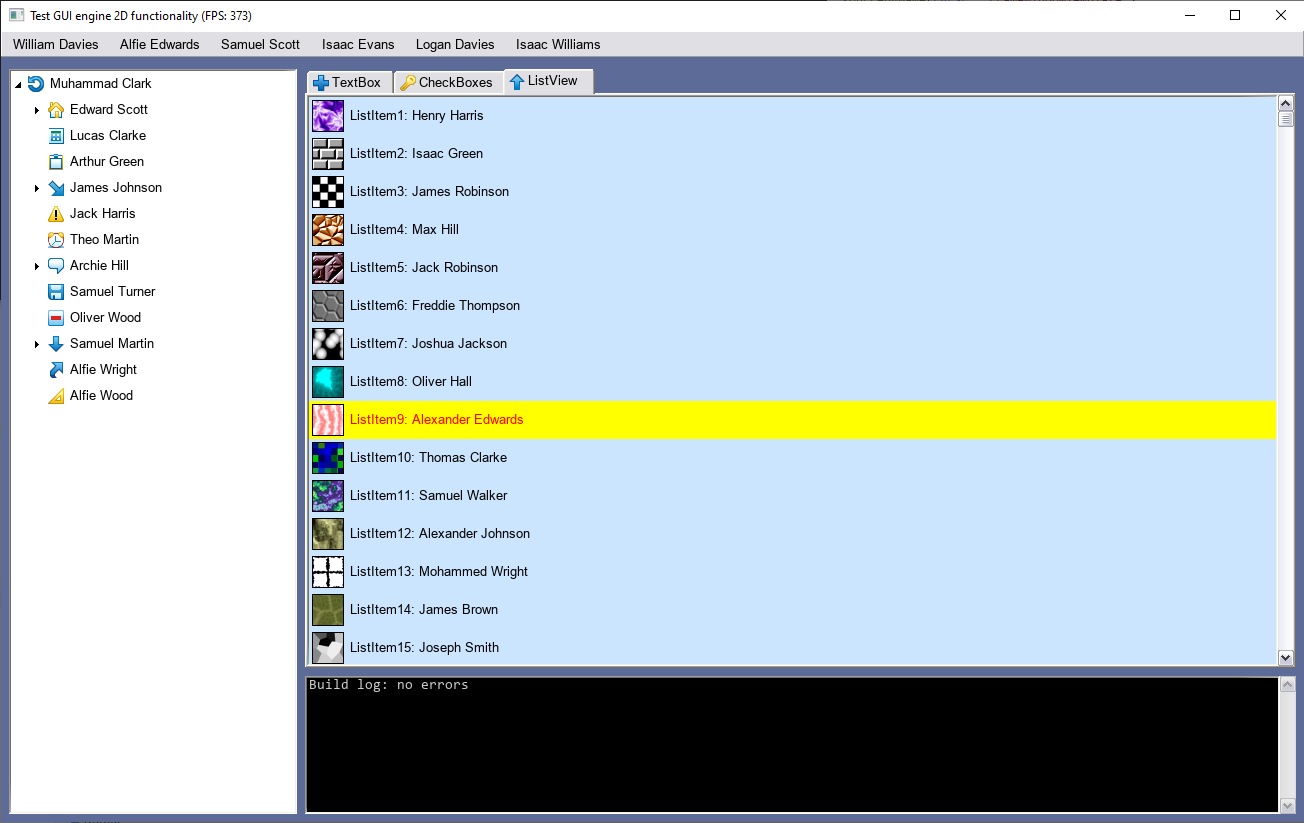

TextureMind Framework – Progress #19 – ListViews, Menus and ComboBoxes

I recently focused my efforts on the development of some widgets for displaying lists of items, in particular: listviews, menus and combo boxes. Listviews are optimized to handle hundreds of thousands of items without causing the application to slow down too much. Listviews can be configured to draw colors corresponding to different events, such as when the mouse passes over an item, when it's clicked, selected or out of focus. It is possible to select a single item or a range of items by holding down the SHIFT key, or add or subtract a single item by holding down the CTRL key.
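The SHIFT / CTRL selection rules described above follow a common pattern that can be sketched as a small selection model. This is a hypothetical Python illustration, not the widget's code; it stores selected indices in a set and remembers an anchor item for range selection.

```python
class ListSelection:
    """Selection model: plain click, SHIFT range, CTRL toggle."""

    def __init__(self):
        self.selected = set()
        self.anchor = None  # item where the last plain or CTRL click landed

    def click(self, index, shift=False, ctrl=False):
        if shift and self.anchor is not None:
            low, high = sorted((self.anchor, index))
            self.selected = set(range(low, high + 1))  # whole range
        elif ctrl:
            self.selected ^= {index}                   # add or remove one item
            self.anchor = index
        else:
            self.selected = {index}                    # single selection
            self.anchor = index
        return sorted(self.selected)
```

With hundreds of thousands of items, a real implementation would likely store ranges rather than individual indices, but the rules stay the same.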

When one or more items are selected, an event is generated by a callback system. Another interesting innovation concerns the menus. I used listviews to implement an entire drop-down menu system with a rather classic setup. Menu boxes can be opened and closed in real time. When an item is clicked, it is then reported to the application via the same callback system used for the listviews. It should be remembered that this GUI is drawn inside the screen, so these widgets will never come out of the application window but they have to adapt dynamically to best fit the available space. After implementing the menu it was possible to implement combobox widgets as well. Once you click on the combobox, a drop-down menu will open and the click will change the selected item within the box, generating an event. As for the menus, also in this case the drop-down menus will best occupy the space available on the screen during the window resize. The positioning of the drop-down menu will also take into account any geometric transformation applied to the widget that is opening the menu, repositioning it in the best way.
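Since the menus must stay inside the application window, the placement logic can be sketched as a clamp: open below the widget when there is room, otherwise above it, otherwise pin the menu to the window edge. This Python sketch ignores geometric transformations and uses hypothetical names and rectangle conventions (left, top, right, bottom).

```python
def place_dropdown(anchor, menu_size, window_size):
    """Return the top-left corner of a drop-down that stays in the window.
    anchor: (left, top, right, bottom) of the widget opening the menu."""
    left, top, _right, bottom = anchor
    menu_w, menu_h = menu_size
    window_w, window_h = window_size

    # Horizontally: align with the widget, but never overflow the window.
    x = max(0, min(left, window_w - menu_w))

    if bottom + menu_h <= window_h:      # enough room below the widget
        y = bottom
    elif top - menu_h >= 0:              # otherwise try above it
        y = top - menu_h
    else:                                # otherwise pin to the bottom edge
        y = max(0, window_h - menu_h)
    return x, y
```

Calling the same function again on every window resize gives the dynamic repositioning behavior described above.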

So I can say that the GUI is almost finished. There are a couple of missing widgets but they are not so complicated to implement. I don't think I will create other videos about GUI updates because I will focus on other features. When completed, this GUI will be used to create applications and video games. The next step is to implement an editor to create GUI pages that can be used inside the applications.

PC Breathless Demo #1

I'm glad to release the first demo of PC Breathless that you can try on your own desktop PC or laptop. The minimum requirements are Windows, a GPU and the Vulkan libraries installed.

You can look around with the mouse and move forward and backward with the up / down cursor keys, and optionally go up and down with the left / right mouse buttons.

This demo is not a great evolution of the demo already published on YouTube. The big difference is in the cleanup and the fact that you can safely run it on your PC without issues (be sure to have the Vulkan libraries installed). If you like this project and you want it to continue in the future, please make a donation to support my work. It will be appreciated.

For now, you can see the third stage with no collision detection applied. In future demos, I will add actual walking with collision detection, and the missing code to open the doors. As you can see, the graphics are very simple, without any lights, sky, characters or animated textures. That's because the 3D engine is still under development. As the next step, I will introduce a new lighting system with PBR. I don't rule out implementing a ray-tracing renderer in the future as well.

TextureMind Framework – Progress #18 – AnsiC Parser

A new AnsiC parser was born inside TextureMind Framework. I'm programming this parser from scratch, starting by implementing all the standard ISO C89 features. Most importantly, the parser is designed to be extended to any other language, formal or not, which is the main reason why I didn't use an existing and well-proven parser like Clang. Another reason is that the parser is designed to be lightweight and anchored to the framework's internal architecture.

I implemented all the main features and now the parser is able to parse a huge variety of AnsiC source code. I was also able to parse the donut source code and to get the AST from it:

```c
k;double sin()
,cos();main(){float A=
0,B=0,i,j,z[1760];char b[
1760];printf("\x1b[2J");for(;;
){memset(b,32,1760);memset(z,0,7040)
;for(j=0;6.28>j;j+=0.07)for(i=0;6.28
>i;i+=0.02){float c=sin(i),d=cos(j),e=
sin(A),f=sin(j),g=cos(A),h=d+2,D=1/(c*
h*e+f*g+5),l=cos (i),m=cos(B),n=s\
in(B),t=c*h*g-f* e;int x=40+30*D*
(l*h*m-t*n),y= 12+15*D*(l*h*n
+t*m),o=x+80*y, N=8*((f*e-c*d*g
)*m-c*d*e-f*g-l *d*n);if(22>y&&
y>0&&x>0&&80>x&&D>z[o]){z[o]=D;;;b[o]=
".,-~:;=!*#$@"[N>0?N:0];}}/*#****!!-*/
printf("\x1b[H");for(k=0;1761>k;k++)
putchar(k%80?b[k]:10);A+=0.04;B+=
0.02;}}/*****####*******!!=;:~
~::==!!!**********!!!==::-
.,~~;;;========;;;:~-.
..,--------,*/
```

Pretty cool, isn't it? The source code has the shape of a donut and it generates a rotating 3D donut in ASCII characters. As you can see, it's not conventional source code: it's packed and full of tricks to reduce space, so it was a perfect test for my new parser. I was surprised to see that the parser was actually capable of parsing it, in about 1.37 ms. It would be very cool to execute it now! I plan to write an AST interpreter to manipulate the source code, translate it into another language and execute it. The AST structure can also be used to feed a virtual machine with instructions (like LLVM) or another compiler backend, like TinyC. The main goal is to use this parser for writing applications with full access to the framework's functionality.
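One of the tricks in the donut source is an identifier split across two lines with a backslash (`s\` followed by `in(B)`), which a C front end has to splice before tokenizing. The sketch below shows that step plus a very reduced tokenizer. It is written in Python for brevity, covers only a subset of C tokens, silently skips characters it doesn't know, and is not the framework's parser.

```python
import re

TOKEN = re.compile(r"""
    /\*.*?\*/                  # block comment (dropped later)
  | "(?:\\.|[^"\\])*"          # string literal with escapes
  | \d+\.\d*|\.\d+|\d+         # numeric literal (simplified)
  | [A-Za-z_]\w*               # identifier or keyword
  | &&|\|\||\+=|\+\+|[-+*/%=<>!?:;,.(){}\[\]&|]   # operators (subset)
""", re.VERBOSE | re.DOTALL)


def tokenize(source):
    """Splice backslash-newline pairs, then split the text into tokens."""
    # C translation phase 2: a backslash followed by a newline is removed,
    # so "s\\\nin(B)" becomes "sin(B)" before any token is formed.
    source = source.replace("\\\n", "")
    tokens = []
    for match in TOKEN.finditer(source):
        text = match.group()
        if not text.startswith("/*"):  # comments produce no tokens
            tokens.append(text)
    return tokens
```

A full parser would then build the AST from this token stream, which is where the implicit `int` declarations and old-style function declarations of the donut code come into play.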

Another goal is to implement a GLSL parser and manipulate the source code to integrate shader programs into my material system. It will be possible to copy & paste shaders from Shadertoy into material nodes, to create super complex materials for 3D rendering.

TextureMind Framework – Progress #17 – GUI – Advanced TextBoxes

I've continued with the implementation of the framework's proprietary GUI. This time, I implemented a new widget to handle text boxes. As you can see in the video, this is not just a text box widget to put some text into, but a complete text editor that can be used for any purpose, from a simple notepad to programming languages.

All the main features are implemented, like Unicode support, text editing, scroll bars, multiline selection and clipboard cut & paste. The appearance of the text box can be customized, with a background texture and different colors. You can also rotate the window and apply complex geometric transformations to it. All the GUI in this video is rendered with the Vulkan libraries, but it can also be rendered with the Cairo libraries.

TextureMind Framework – Progress #16 – Modules and object interfaces

After years without updates, I'm glad to present what is probably the most important update so far. In the past, the framework was just a monolith of C++ static libraries that could be entirely or partially included within a project to access various functionality. On one hand it was good, because it simplified the programming of the framework's parts; on the other, it started to become a problem in terms of modularity and scalability. One of the worst complications was caused by the redundancy of the static library binaries: for example, if I had to create a plugin system, each dynamic library would have to include all the binaries with the functions to manage the various components of the framework.

TextureMind Framework – Vulkan renderer showcase

I created a video to show the potential of the Vulkan renderer included in my framework. Now the engine can import 3D models from several 3D formats (including collada) and render them with Vulkan libraries.

The engine is equipped with a proprietary material system and mesh format. The materials of the imported models are converted to the engine's materials format which is then converted into the shaders that are used to make them work. As you can see from the video, the engine is already capable of rendering millions of triangles at high framerate and resolution (3840x2160).

TextureMind Framework – Progress #15 – Vulkan – Bitmap Text Rendering

Now the Vulkan engine can draw text with bitmap rendering. The engine checks which characters are on the screen and dynamically creates textures only for the fonts to be drawn, with the correct size and aspect.

The characters are pre-rendered into bitmaps with the FreeType library and loaded into Vulkan textures only if needed. When the text is no longer rendered, the font is deallocated to free space for other resources. In this way, fonts can be drawn as normal textured polygons, without a significant impact on performance. The text rendering algorithm is capable of drawing text with different alignment formats, including the "justified" one that you see in this video, like in any word processor. The GUI is drawn with the GPU and it can be used for video games or 3D applications which require advanced performance and functionality.
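Justified alignment comes down to distributing the leftover width across the gaps between words. The sketch below shows the idea in Python on a fixed-width character grid; the engine works with glyph widths in pixels, so this is only an analogy, and the function name is hypothetical.

```python
def justify_line(words, width):
    """Pad the gaps between words so the line is exactly `width` cells."""
    if len(words) == 1:
        return words[0].ljust(width)
    total_chars = sum(len(word) for word in words)
    gaps = len(words) - 1
    # Every gap gets `spaces`; the first `extra` gaps get one more.
    spaces, extra = divmod(width - total_chars, gaps)
    parts = []
    for i, word in enumerate(words[:-1]):
        parts.append(word + " " * (spaces + (1 if i < extra else 0)))
    return "".join(parts) + words[-1]
```

The last line of a paragraph is normally left-aligned instead of stretched, which a caller can handle by skipping this function for it.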

TextureMind Framework – Progress #14 – Vulkan – Skinned mesh

Skinned mesh rendering is a fundamental part of every modern 3D engine, so I couldn't avoid implementing it. The skinned mesh with weights, indices, bones, skeleton and animated nodes is imported with the AssImp library into my format. I added weights and indices to the vertex attributes, while bone matrices are written into a shader storage buffer object (SSBO). The skinning is computed on the GPU, by the vertex shader.

In the video you can see the final result of the implementation. The model has been imported from Doom 3 format into my format, then animated and rendered by the 3D engine. For now, the quaternion keys are interpolated with a slerp on every frame. A possible optimization is to pre-calculate all the bones into an SSBO at a fixed frame rate (like 60 fps) and use it to render a massive number of meshes.
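For reference, the slerp used to interpolate quaternion keys can be written as follows. This is the standard formula in Python, with quaternions stored as (w, x, y, z) tuples and a fallback to normalized linear interpolation for nearly parallel inputs; the 0.9995 threshold is a common convention, not a value taken from the engine.

```python
import math


def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:                 # q and -q are the same rotation:
        q1 = tuple(-c for c in q1)  # flip one to take the shorter arc
        dot = -dot
    if dot > 0.9995:              # nearly parallel: lerp avoids dividing by ~0
        out = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        s1 = math.sin(t * theta) / math.sin(theta)
        out = tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in out))
    return tuple(c / norm for c in out)
```

Halfway between the identity and a 90 degree rotation about Z, this returns a 45 degree rotation about Z.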

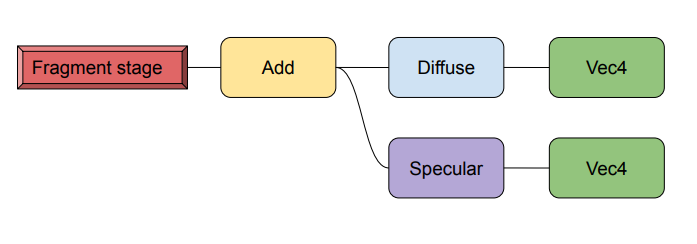

TextureMind Framework – Progress #13 – Vulkan – Advanced materials

I improved the material system, introducing the lighting stage. I removed the fragment stage and replaced it with a color stage and a lighting stage. The output color is calculated as the sum of the color stage and the lighting stage. The color stage has only one material node as input, which is used to produce the output color for this stage.

The lighting stage needs more inputs, like ambient, diffuse (or albedo), specular, roughness and metalness, which are mixed with physically based rendering (PBR). Each input is connected to one material node that can be the result of an operation between several material nodes, so every stage can have its own textures or a math operation between textures, uniforms and constants. In the video you can see a model with advanced materials imported to show the benefits of the latest optimizations. In this case, the ambient stage is rendered correctly and mixed with the diffuse textures.

TextureMind Framework – Progress #12 – Vulkan – Import materials and normal maps

Now the importer based on the AssImp library is able to import model materials and textures into my format. I also added support for normal maps with tangent and bitangent vertex attributes, improving the lighting stage in the fragment shader to render them properly.

In the video you can see the nanosuit model imported from COLLADA format. As the object rotates, you can see the benefits of bump mapping and specular textures.

TextureMind Framework – Progress #11 – Vulkan – Import 3D model

I decided to use AssImp library to import models from other formats to my 3D mesh format. The video shows a first implementation of the importer.

Vertices and normals are converted along the skeleton structure, while the red material is generated just to render the model on the screen. The next step is to load the materials and the associated textures.

TextureMind Framework – Progress #10 – Vulkan – Materials and 3D rendering

Finally, the very first 3D model rendered by the 3D engine. Even if it looks like a simple torus demo, the main feature this time is the format used for the 3D mesh and the conversion from material nodes to Vulkan shaders for the rendering.

The mesh is composed of a polygon hull, a set of vertex attributes and a layout that defines the nature of the vertex attributes. The polygon hull represents the geometric structure of the mesh, while the vertex attributes define its graphical and physical aspect. A mesh can have virtually any number of vertex attributes, which can be: position, normal, colors, texcoords and other new attributes used by the material.

Materials are composed of expression nodes, which are converted to shaders in a second step. Every material has a layout with the vertex attributes required for the rendering. The material structure used to render this model is the following:

The layout of the mesh doesn't have to match the material's layout exactly: if the mesh has the required vertex attribute then it's used, otherwise zero values are used instead. It's up to the material to decide how to use the vertex attributes offered by the mesh. In this way, a single material can be used to render any kind of mesh. Of course, a mesh without normals cannot render diffuse or specular lighting, and a mesh without texcoords cannot render textures, normal maps and so on.
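The matching rule between a material layout and a mesh can be sketched as a small binding step: use the mesh attribute when present, otherwise substitute zeros of the right size. The Python below is a hypothetical illustration; the attribute names and data structures are not the framework's.

```python
def bind_attributes(material_layout, mesh_attributes, vertex_count):
    """material_layout: {attribute name: component count}.
    mesh_attributes: {attribute name: list of per-vertex tuples}.
    Returns the per-vertex data the material will actually read."""
    bound = {}
    for name, components in material_layout.items():
        if name in mesh_attributes:
            bound[name] = mesh_attributes[name]          # offered by the mesh
        else:
            zero = (0.0,) * components                   # missing: zero values
            bound[name] = [zero] * vertex_count
    return bound
```

Attributes that the mesh offers but the material doesn't request are simply ignored, which is what lets one material render any mesh.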

Uniform buffers can be used by a single mesh to change the material content, like colors or texture coords. For instance, the color of diffuse in this material can be connected to a uniform contained by a 3D mesh, that can be changed on the fly, changing the color of the object. In this way, it's possible to reuse the same materials for multiple objects, even with different aspects, like particles or game characters.

TextureMind Framework – Progress #9 – Vulkan – Materials and textures

I improved the implementation of materials and textures with Vulkan. Now every material is translated into a GLSL shader, which is converted into SPIR-V code with the shaderc library. The shader is generated along with the graphics pipeline to match the material settings. For now, materials are very simple and are used to draw an image texture with alpha blending or a filled color.
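To give an idea of how a tree of material nodes can be turned into GLSL text, here is a minimal sketch in Python. The node classes, the `v_texcoord` and `out_color` names and the binding scheme are all hypothetical; the real framework is C++ and then passes the generated GLSL to shaderc, a step not shown here.

```python
class Constant:
    def __init__(self, r, g, b, a):
        self.rgba = (r, g, b, a)

    def samplers(self):
        return set()

    def glsl(self):
        return "vec4(" + ", ".join(f"{c:.3f}" for c in self.rgba) + ")"


class Texture:
    def __init__(self, sampler):
        self.sampler = sampler

    def samplers(self):
        return {self.sampler}

    def glsl(self):
        return f"texture({self.sampler}, v_texcoord)"


class Multiply:
    def __init__(self, left, right):
        self.left, self.right = left, right

    def samplers(self):
        return self.left.samplers() | self.right.samplers()

    def glsl(self):
        return f"({self.left.glsl()} * {self.right.glsl()})"


def fragment_shader(root):
    """Emit a fragment shader whose output is the root node's expression."""
    lines = ["#version 450",
             "layout(location = 0) in vec2 v_texcoord;",
             "layout(location = 0) out vec4 out_color;"]
    for binding, name in enumerate(sorted(root.samplers())):
        lines.append(f"layout(binding = {binding}) uniform sampler2D {name};")
    lines.append("void main() { out_color = " + root.glsl() + "; }")
    return "\n".join(lines)
```

A textured material tinted red would be `fragment_shader(Multiply(Texture("u_albedo"), Constant(1, 0, 0, 1)))`.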

As you can see from the video, the GUI now has a normal appearance instead of the rainbow rectangles from before. The next step is to support path rendering and font rendering, for drawing text. In the future, the same material system will be used to draw 3D content too.

TextureMind Framework – Progress #8 – Graphics context and 2D GUI with Vulkan

I am happy to announce that the Vulkan library has finally been integrated into my framework. Nothing complicated for the moment: I limited myself to implementing a specialization of the Graphics Context that draws simple colored rectangles instead of the images drawn by the Cairo library. At the programming interface level, it's possible to invoke drawing commands with the same degree of complexity and practically identical management of textures, materials and uniforms.

Each rectangle is associated with a transformation matrix, which is translated into a uniform buffer. It's also possible to organize the rendering into multiple layers, allowing the reuse of command buffers with minimal programming effort.

As you can see from the image above, the 2D GUI based on the graphics context works quite well. It's possible to drag the windows and see them move on the screen at a high framerate, which is the main reason it's worth bothering with the Vulkan libraries.

For the moment there is an implementation of textures and materials, but I have not yet finished the rendering part at shader level. The difficulty lies in the fact that the framework must resolve the material nodes to extract the proper GLSL shader to be converted into SPIR-V, create a suitable graphics pipeline and set it before rendering. The next step is to finish this part and make the 2D GUI identical to the Cairo version.

Then I can proceed to implement 3D functionality, with full material management. The main goal is to implement an importer with the AssImp library and load 3D models. Then I will refine the 3D functionality with a sophisticated engine optimized for modern real-time computer graphics.

TextureMind Framework – Progress #7 – 2D GUI with Cairo

Finally I have a first working version of the 2D GUI based on the Cairo libraries. The entire GUI architecture is based on 2D Engine components like the graphics and physics engines. The graphics engine makes use of a graphics context that in this implementation is based on Cairo, but it can be specialized with any library.

As you can see in the video, I reused an old skin from Windows XP, but the skin is totally programmable and it will be changed in the future. For now there are only simple widgets like form windows, buttons, option buttons and check boxes. The next step is to implement other composite widgets like scroll bars, text boxes, tabs, lists, tree views and so on. This GUI can be used for video games or to produce professional applications. The GUI is designed to run in full screen or using the widgets of the operating system. The full-screen variant can be specialized to work with GPU libraries, like Direct3D or Vulkan. As a modern feature, a transform matrix can be applied to every widget, so widgets can be translated, rotated, scaled or skewed with matrix operations. The interface can be designed with an external editor rather than with code embedded inside the application. The only code required on the application side is the code that manages the widget events.

TextureMind Framework – Progress #6 – 2D Engine and assets

Having a graphics context to draw something on the screen is not enough when you have to deal with complex scenes made of many textures, materials, shapes and assets of any kind. This is why, at some point in the development of my framework, I introduced the concepts of Scene, Engine and Resources. Basically, a Scene is a collection of elements, which can be 2D or 3D objects like shapes or meshes; the Engine is a component used to handle the scene; and Resources is a set of textures, materials and assets. All these kinds of resources are referenced by elements through UUID strings.

I implemented different kinds of Engines. The 'Generic' Engine is used to pre-process the scene to prepare it for rendering or for other kinds of operations, like collision detection. When the Generic Engine iterates over the scene, all its internal geometries are transformed so they can be placed on the screen. The 'Graphics' Engine translates the transformed scene into a series of draw commands for the graphics context. The picture shows a simple test of the Engine, with an element that is a 2D shape composed of three sub-paths (1 contour and 2 holes), with a radial texture material for the fill and a color material for the external stroke. Even if this test is simple, the Engine is designed to handle far more complex scenes and it will be used to create a whole 2D GUI from scratch.
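To make the relationship between Scene, Resources and the Graphics Engine more concrete, here is a minimal sketch. All type and function names are hypothetical, and the draw commands are plain strings only to keep the example short; the real framework classes are not shown in this post.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical resource type; real materials carry far more data.
struct Material {
    std::string name;
};

// A scene element refers to its materials by UUID string, never by pointer.
struct Element {
    std::string shapeName;
    std::string fillMaterialUuid;
    std::string strokeMaterialUuid;
};

struct Resources {
    std::map<std::string, Material> materials;  // keyed by UUID

    const Material* FindMaterial(const std::string& uuid) const {
        auto it = materials.find(uuid);
        return it == materials.end() ? nullptr : &it->second;
    }
};

struct Scene {
    std::vector<Element> elements;
};

// Stand-in for the Graphics Engine step: resolve the UUID references and
// emit one draw command per resolved material. Unresolved UUIDs are skipped.
std::vector<std::string> BuildDrawCommands(const Scene& scene, const Resources& res)
{
    std::vector<std::string> commands;
    for (const Element& e : scene.elements) {
        if (const Material* fill = res.FindMaterial(e.fillMaterialUuid))
            commands.push_back("fill " + e.shapeName + " with " + fill->name);
        if (const Material* stroke = res.FindMaterial(e.strokeMaterialUuid))
            commands.push_back("stroke " + e.shapeName + " with " + stroke->name);
    }
    return commands;
}
```

Referencing resources by UUID instead of by pointer keeps a serialized scene valid even when the resources are loaded in a different order or from a different file.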

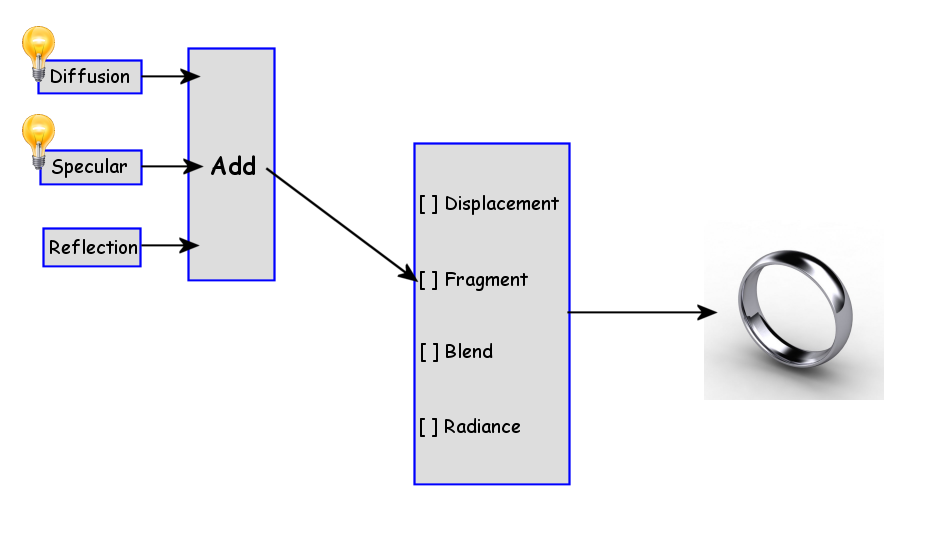

TextureMind Framework – Progress #5 – Materials and path rendering

In my framework, I designed materials to be extremely scalable. First of all, I decided to abandon the old format, similar to that of 3D Studio Max or Maxon Cinema 4D, and adopt a format closer to UE4's, which is based on Visual Expression Nodes. In my case a node is called a "material component".

A material is composed of different stages: displacement, fragment, blend and radiance. Every stage has parameters and a single input component, which can be a texture with texture coordinates, a diffusion with lights and normals, or a combination of several components through "add" or "multiply" nodes.

If program shaders are supported by the graphics context specialization, the material is translated into a program shader, otherwise it will be rendered as best as possible, with the component types supported by the graphics library.
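The translation of a node tree into shader code can be pictured with a small sketch like the following. It is only an assumption about how such a translation might look: the class names, the `outColor` output variable and the `FragmentStageBody` function are all hypothetical, and only the "multiply"/"add" combination and the texture input come from the description above.

```cpp
#include <memory>
#include <string>
#include <utility>

// Hypothetical base class for a "material component" node.
struct MaterialComponent {
    virtual ~MaterialComponent() = default;
    virtual std::string ToGlsl() const = 0;  // GLSL expression for this node
};

// Texture sampled at the given coordinates.
struct TextureComponent : MaterialComponent {
    std::string sampler, coords;
    TextureComponent(std::string s, std::string c)
        : sampler(std::move(s)), coords(std::move(c)) {}
    std::string ToGlsl() const override {
        return "texture(" + sampler + ", " + coords + ")";
    }
};

// A named uniform, for example a tint color.
struct UniformComponent : MaterialComponent {
    std::string name;
    explicit UniformComponent(std::string n) : name(std::move(n)) {}
    std::string ToGlsl() const override { return name; }
};

// "add" ('+') or "multiply" ('*') node combining two inputs.
struct BinaryComponent : MaterialComponent {
    char op;
    std::unique_ptr<MaterialComponent> lhs, rhs;
    BinaryComponent(char o,
                    std::unique_ptr<MaterialComponent> l,
                    std::unique_ptr<MaterialComponent> r)
        : op(o), lhs(std::move(l)), rhs(std::move(r)) {}
    std::string ToGlsl() const override {
        return "(" + lhs->ToGlsl() + " " + op + " " + rhs->ToGlsl() + ")";
    }
};

// Resolves the single input component of a stage into one GLSL statement.
std::string FragmentStageBody(const MaterialComponent& root)
{
    return "outColor = " + root.ToGlsl() + ";";
}
```

A context without program shaders could walk the same tree and pick only the node types it can render natively, which matches the "rendered as best as possible" fallback.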

TextureMind Framework – Progress #4 – Windows and Cairo graphics context

I implemented a set of classes to handle system windows and events. Now it's possible to open a window and draw an image inside it. I also programmed an abstract class for the graphics context, to handle the functionality common to the most important graphics libraries, like DirectX, OpenGL and Vulkan, even though the first specialization of the context uses the Cairo library for software rendering.

The abstraction layer makes the context compatible with the features available from the graphics library that specializes it. For example, Cairo supports linear and radial patterns and path rendering, but no other patterns can be programmed with program shaders. If a feature is not supported by the library, it is reported as not supported by an enum function exposed by the abstract class. In this way, the component that uses the rendering context is aware of the features that are available and can make the best use of them. The image shown in the example is a demo written with the specialized class that uses the Cairo library, with a linear pattern and path rendering.
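A feature query of this kind might look like the sketch below. The enum values, class names and the `ChooseFillStrategy` helper are hypothetical and only illustrate the pattern; the feature set of the Cairo-like context follows the description above.

```cpp
#include <string>

// Hypothetical feature identifiers.
enum class GraphicsFeature {
    LinearPattern,
    RadialPattern,
    PathRendering,
    ProgramShaders
};

enum class FeatureSupport { Supported, NotSupported };

// Abstract graphics context: every specialization reports what it can do.
class GraphicsContext {
public:
    virtual ~GraphicsContext() = default;
    virtual FeatureSupport QueryFeature(GraphicsFeature feature) const = 0;
};

// Stand-in for a Cairo-based specialization: patterns and paths are
// available, program shaders are not.
class CairoLikeContext : public GraphicsContext {
public:
    FeatureSupport QueryFeature(GraphicsFeature feature) const override {
        switch (feature) {
        case GraphicsFeature::LinearPattern:
        case GraphicsFeature::RadialPattern:
        case GraphicsFeature::PathRendering:
            return FeatureSupport::Supported;
        case GraphicsFeature::ProgramShaders:
            return FeatureSupport::NotSupported;
        }
        return FeatureSupport::NotSupported;
    }
};

// A caller picks the best rendering path the context can offer.
std::string ChooseFillStrategy(const GraphicsContext& ctx)
{
    if (ctx.QueryFeature(GraphicsFeature::ProgramShaders) == FeatureSupport::Supported)
        return "shader";
    if (ctx.QueryFeature(GraphicsFeature::RadialPattern) == FeatureSupport::Supported)
        return "radial-pattern";
    return "flat-color";
}
```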

TextureMind Framework – Progress #3 – Graphics context and external libraries

One of the most important components in a framework is a cross-platform loader of dynamic libraries. Without it, you cannot access the functionality of external dynamic libraries like OpenGL, DirectX or Vulkan, or at least you have to add extra code for every library on every platform you want to support. In some cases it's better not to link a dynamic library at build time and to use LoadLibrary() or dlopen() instead. With this component, I don't have to worry about how the library is linked or which platform or operating system I'm about to support, and the effort of loading and linking an external library is very small. After that, I decided to use this component to dynamically link DevIL and implement full support for image conversions with this library. I also implemented a full set of classes to handle 2D shapes and 3D objects.
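A loader of this kind can be sketched as a thin wrapper around `LoadLibraryA()`/`GetProcAddress()` on Windows and `dlopen()`/`dlsym()` elsewhere. The `DynamicLibrary` class below is a hypothetical illustration, not the framework's actual component.

```cpp
#include <string>

#if defined(_WIN32)
#include <windows.h>
#else
#include <dlfcn.h>
#endif

// Hypothetical RAII wrapper around a dynamically loaded library.
class DynamicLibrary {
public:
    explicit DynamicLibrary(const std::string& path) {
#if defined(_WIN32)
        m_handle = static_cast<void*>(::LoadLibraryA(path.c_str()));
#else
        m_handle = ::dlopen(path.c_str(), RTLD_NOW | RTLD_LOCAL);
#endif
    }

    ~DynamicLibrary() {
        if (!m_handle) return;
#if defined(_WIN32)
        ::FreeLibrary(static_cast<HMODULE>(m_handle));
#else
        ::dlclose(m_handle);
#endif
    }

    // The handle is owned by exactly one object.
    DynamicLibrary(const DynamicLibrary&) = delete;
    DynamicLibrary& operator=(const DynamicLibrary&) = delete;

    bool IsLoaded() const { return m_handle != nullptr; }

    // Returns nullptr when the library or the symbol is missing.
    void* GetSymbol(const std::string& name) const {
        if (!m_handle) return nullptr;
#if defined(_WIN32)
        return reinterpret_cast<void*>(
            ::GetProcAddress(static_cast<HMODULE>(m_handle), name.c_str()));
#else
        return ::dlsym(m_handle, name.c_str());
#endif
    }

private:
    void* m_handle = nullptr;
};
```

On Linux, for example, `DynamicLibrary vulkan("libvulkan.so.1");` followed by `vulkan.GetSymbol("vkGetInstanceProcAddr")` would give access to the Vulkan entry point without linking against the library at build time. On older glibc versions the program must be linked with `-ldl`.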

Another fundamental component for every 2D or 3D engine is the graphics context. In my framework, a graphics context is an abstraction layer of functionality exposed by the rendering context of a graphics library, like OpenGL or Direct3D. Once I defined a full set of draw commands for drawing 2D shapes and 3D objects, I made a first specialization of this interface using the Cairo library with path rendering for drawing 2D graphics only.

TextureMind Framework – Progress #2 – Improve serialization and math classes

Even though this framework has been designed for generic purposes, it will mainly be used to program graphics applications. With this in mind, I implemented a full set of serializable classes to handle complex numbers, vectors and matrices, and all the geometric operations needed to build a 3D engine.

To serialize enum variables that should appear as constants instead of numbers, I introduced "constant strings" (e.g. LEFT, GREATER, NULL) in human-readable formats like xml or json. When such a variable is deserialized by the framework, the constant string is translated into its respective numeric value; conversely, the numeric value is translated into its constant string during serialization.

For instance, an extended vector 2D with anchor variables:

enum PositionAnchorEnum {

TMD_POSITION_ANCHOR_LEFT = 0,

TMD_POSITION_ANCHOR_RIGHT = 1,

TMD_POSITION_ANCHOR_TOP = 2,

TMD_POSITION_ANCHOR_BOTTOM = 3,

TMD_POSITION_ANCHOR_NEAR = 4,

TMD_POSITION_ANCHOR_FAR = 5

};

template <class T>

class ExtVector2 : public Vector2<T>

{

public:

[...]

T m_x;

T m_y;

PositionAnchorEnum m_xAnchor;

PositionAnchorEnum m_yAnchor;

};

[...]

ExtVector2<float> origin;

origin.m_x = 0;

origin.m_y = 0;

origin.m_xAnchor = TMD_POSITION_ANCHOR_LEFT;

origin.m_yAnchor = TMD_POSITION_ANCHOR_TOP;

is saved to:

<origin x="0" y="0" xAnchor="LEFT" yAnchor="TOP" />
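The translation between numeric values and constant strings can be sketched with a simple lookup table. The table and the two helper functions below are hypothetical; only the enum and the LEFT/TOP naming come from the example above.

```cpp
#include <cstdlib>
#include <string>

enum PositionAnchorEnum {
    TMD_POSITION_ANCHOR_LEFT = 0,
    TMD_POSITION_ANCHOR_RIGHT = 1,
    TMD_POSITION_ANCHOR_TOP = 2,
    TMD_POSITION_ANCHOR_BOTTOM = 3,
    TMD_POSITION_ANCHOR_NEAR = 4,
    TMD_POSITION_ANCHOR_FAR = 5
};

// Hypothetical table entry pairing a constant string with its value.
struct ConstantString {
    const char* name;
    int value;
};

static const ConstantString kPositionAnchorConstants[] = {
    {"LEFT",   TMD_POSITION_ANCHOR_LEFT},
    {"RIGHT",  TMD_POSITION_ANCHOR_RIGHT},
    {"TOP",    TMD_POSITION_ANCHOR_TOP},
    {"BOTTOM", TMD_POSITION_ANCHOR_BOTTOM},
    {"NEAR",   TMD_POSITION_ANCHOR_NEAR},
    {"FAR",    TMD_POSITION_ANCHOR_FAR}
};

// Serialization: numeric value -> constant string.
// Unknown values fall back to their decimal representation.
std::string AnchorToString(int value)
{
    for (const ConstantString& c : kPositionAnchorConstants)
        if (c.value == value) return c.name;
    return std::to_string(value);
}

// Deserialization: constant string -> numeric value.
// Text that is not a known constant is parsed as a plain number.
int AnchorFromString(const std::string& text)
{
    for (const ConstantString& c : kPositionAnchorConstants)
        if (text == c.name) return c.value;
    return std::atoi(text.c_str());
}
```

Accepting plain numbers on input keeps files readable by the loader even when they were written without constant strings.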

TextureMind Framework – Progress #1 – Serialization and log

I continued to program the TextureMind Framework and I'm pretty happy with the result. I hope this framework will give me the chance to increase my software output and to save a lot of time (because I don't have much). People have told me many times to use existing frameworks for my work, and I tried. Most of them are not suitable for what I want to do, or they have licensing issues, or I simply don't like them. I want to make something new and innovative, and I feel like I'm about to do it.

- Serialization

Let me say that the serialization is a masterpiece. You can program new classes directly in C++ with a very easy pattern, then save and load all the data in four formats: raw (*.raw), interchangeable binary (*.tmd), human-readable xml (*.xml) and json (*.json).

Unrelated Engine – Deferred Rendering and Antialiasing

I tried to implement explicit multisample antialiasing and I got good results, but it's slow on a GeForce 9600GT. A scene running at 110 fps dropped to 45 fps with only four samples, just to give an idea of the slowdown. While I was jumping to the ceiling for the amazing image quality of a REAL antialiasing with deferred shading (not the fake crap called FXAA), I fell down to the floor after I saw the fps. What a shame.

Anyway, I decided to change from a deferred shading to a deferred lighting model, just to implement a good trick that lets me use the classic multisample (which my card handles pretty well even with 16 samples!): in the final step I read from the light accumulation buffer and write the geometry to the screen with antialiasing enabled. The result is a little weird, but you can fix it by using that crap FXAA on the light accumulation buffer, which is smoother than the other image components. For example, I can use mipmapping or anisotropic filtering to eliminate the texture map aliasing, FXAA to eliminate the light accumulation buffer aliasing and finally MSAA to eliminate the geometry aliasing.

ps: I used the nanosuit model from this site: www.gfx-3d-model.com/2009/09/nanosuit-3d-model/

TSREditor – Last screenshots

TSREditor is a huge editor in the style of Blender3D, designed to create or edit resources like textures, 3d models, sounds and levels for games and other stuff.

In spite of the large amount of work needed to reach a decent version of this software, I decided that it will be free as a part of my Unrelated Framework. At the moment I'm far from a decent beta version to release, but I can show you two nice screenshots of the program at work.

Unrelated Engine – Work in progress

This is the first "work in progress" video of my 3d engine called Unrelated Engine. Some complex animated models come from Doom 3. They were converted to a maximum of 4 weights per vertex, and identical vertices were merged to improve speed. Shaders were used to render a large number of skinned meshes and complex materials at a reasonable speed.

The 3d models are from doom3 and from http://www.models-resource.com/, they were used only to test my engine and to make this video. The rights of these 3d models and the music are reserved by their respective authors.

Unrelated Engine – A nice test with Mario 64 map

I created a nice video with an engine that I'm still developing and that is part of my Unrelated Framework. It uses:

- OpenGL (to draw the graphics)

- DevIL (to import images)

- Assimp (to import 3d models)

The 3d models are from http://www.models-resource.com/, they were used only to test my engine and to make this video. The rights of these 3d models and the music are reserved by their respective authors.

Unrelated Framework – Inclusion of OpenIL/DevIL

OpenIL is a library with very powerful image loading capabilities and I decided to include it in my framework. My standard can handle several image formats, from the classic 8 bit RGB to more advanced formats like 16 bit RGB or High Dynamic Range. Images are imported from file and kept in their original format whenever possible. For other features, check the OpenIL official web site (http://openil.sourceforge.net/)

Supports loading of:

- Windows Bitmap (.bmp)

Supports saving of:

- Windows Bitmap (.bmp)

This is an example of how it works in my framework:

C_Image *newImage = new C_Image();

//load from file

newImage->ImportFromFile("test.jpg");

newImage->ImportFromFile(UF_FILE_JPG, "test.jpg");

//export to file

newImage->ExportToFile(UF_FILE_HDR, "test.hdr");

//set file format jpeg compression

newImage->SetFileFormat(UF_FileFormat_JPG(50)); //the image will be saved in my format with a jpeg quality of 50

newImage->SaveToFile("test.img");

Gianpaolo Ingegneri

Copyright @ 2010 – All right reserved

Unrelated Framework – Inclusion of ZLib

The Unrelated Framework now supports Lempel-Ziv compression of data through ZLib. Classes can be compressed in memory, loaded and saved with a few lines of code and without limits. For example:

//this works only with resources like images, fonts, sounds, etc...

C_Image *newImage = new C_Image();

newImage->ImportFromFile("test.tga"); //load image from file

newImage->SetFileFormat(UF_FileFormat_Zip(6)); //set a zip file format of 6th level

newImage->SaveToFile("test.img"); //save the zipped resource on file, simple isn't it?

//if you want to load...

newImage->LoadFromFile("test.img"); //the system understands that it was zipped

newImage->SetFileFormat(NULL); //set it NULL if you don't want the zip compression in the future

or

//this can be used for every kind of object

C_Image *newImage= new C_Image();

newImage->ImportFromFile("test.tga"); //load image from file

C_Object_Zip *objZip = new C_Object_Zip(); //init a zip container

objZip->CompressObject(newImage, 6); //compress the image object

objZip->SaveToFile("test.zob"); //save the zipped object on file

delete newImage;

//if you want to load...

objZip->LoadFromFile("test.zob"); //load the zipped object from file

newImage = (C_Image *)objZip->UncompressObject(); //get the uncompressed object

delete objZip; //delete and free the zip object memory


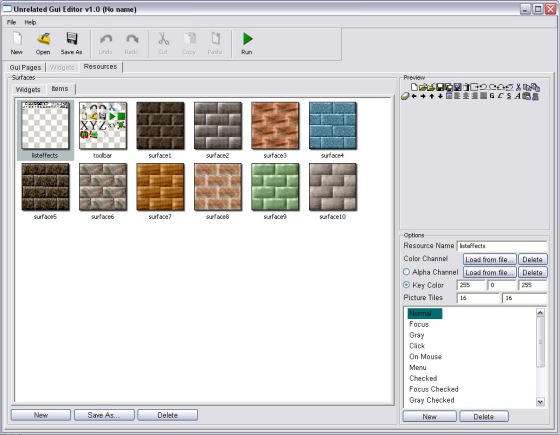

Unrelated Framework – Gui and Gui Editor

Finally I completed the GUI of my framework. Its best feature is that the GUI can work in two modes: via software rendering or using OpenGL. This is very helpful for cross-platform compatibility, for video games and for other OpenGL uses.

The GUI is in its first version but it has all the widgets necessary to create professional applications. An interesting feature is that you don't need to write a single line of code to create a given interface: with the Gui Editor you can easily design all kinds of professional interfaces and load them in your program with a few function calls. You don't need to code the widgets to make them work properly. In this way you can save hours of programming.

Here is the full list of implemented widgets:

- Button

- Radio button

- CheckBox

- Form

- Frame Window

- FrameBox

- PictureBox

- ScrollBar

- Scroll space

- TextBox

- ComboBox

- Menu

- ListView

- TreeView

- ToolBar

- Image button

- Graphic Api Viewer

Of course the GUI is coded in C++, it's object oriented and it's an integral part of my Unrelated Framework.
