After considerable effort, I managed to extend the number of Linux distributions supported by TextureMind Framework and all the applications built with it, including DWorkSim and TMD. Initially I thought of supporting a fixed set of distributions, namely CentOS 7, CentOS 8, Rocky 9, Ubuntu 20, Ubuntu 22 and Ubuntu 24. After a week of work, I figured out how to achieve an amazing result: compiling on just one distribution and running the applications on all the others, from glibc 2.17 onwards. This was possible thanks to the framework's design and its minimal external dependencies. It will greatly simplify the entire build process, because I can deploy everywhere on Linux with a single build. As an immediate effect, from now on it will be possible to download a Linux application as a single package and run it on any distribution released in the last 10 years or more.
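One way to check that a build stays within the glibc 2.17 baseline is to look at the versioned symbols a binary imports and take the highest `GLIBC_x.y` tag. The following is a minimal sketch in Python, not the framework's actual deployment scripts: the helper names and the sample lines (written in the style of `objdump -T` output) are hypothetical.

```python
import re

# Matches version tags such as GLIBC_2.17 or GLIBC_2.2.5 in a symbol listing.
_GLIBC_TAG = re.compile(r"GLIBC_(\d+(?:\.\d+)+)")

def max_required_glibc(symbol_lines):
    """Return the highest glibc version referenced, as a tuple, or None."""
    versions = [
        tuple(int(part) for part in match.group(1).split("."))
        for line in symbol_lines
        for match in _GLIBC_TAG.finditer(line)
    ]
    return max(versions) if versions else None

def runs_on_baseline(symbol_lines, baseline=(2, 17)):
    """True if no imported symbol needs a glibc newer than the baseline."""
    required = max_required_glibc(symbol_lines)
    return required is None or required <= baseline

# Hypothetical sample lines in the style of `objdump -T` output.
sample = [
    "0000000000000000      DF *UND*  0000000000000000  GLIBC_2.2.5 printf",
    "0000000000000000      DF *UND*  0000000000000000  GLIBC_2.14  memcpy",
    "0000000000000000      DF *UND*  0000000000000000  GLIBC_2.17  clock_gettime",
]
print(max_required_glibc(sample), runs_on_baseline(sample))
```

Versions are compared as integer tuples, so 2.2.5 correctly sorts below 2.14, which a plain string comparison would get wrong.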

News about TextureMind, TMD and projects

I have news about the recent development of TextureMind and its projects. This post is a correction of the last post, where I announced that there was a chance for TextureMind to become an actual company. Well, not this time. I decided not to rush things too much, so I will continue to develop projects for the TextureMind activity the way it is now. I also decided to slow down the development of TMD (TextureMind Desktop) a little, and I'm mostly oriented towards releasing the product for free in the near future rather than as a commercial product. I think that for such young software the important thing is to increase its credibility by having it tested by the greatest number of users worldwide. Making it a commercial product so soon would mean confining it to a niche of users, condemning it to a lack of visibility and making it susceptible to strong speculation by third parties. I have evaluated several options and I do not feel that most of them are consistent with my original goals. As I've said in the past, one of the main mottos of TextureMind will be "no greed for money", so I intend to stick to these principles to the core. TMD needs to be known, tested, appreciated. Having paying customers is a self-fulfilling prophecy. I don't want to run the risk of being stuck in a maintenance service with daily customers and tickets, just to scrape together a monthly salary. Making money is just a number that increases in a bank account, and I don't want to sacrifice my life and my opportunities for this anymore. I want to create something beautiful, useful, recognized. Users should be able to use TMD for free and test it to find bugs, so they can report them and I can fix them. That's why I decided that TMD will be free in its first official version, because the alternative doesn't seem exciting to me at all. This choice will be the best way to increase the quality of the product and spread it as much as possible.

TextureMind Desktop V0.2 (beta)

As I promised, today I released the very first version of TextureMind Desktop. If you don't know what I'm talking about:

TextureMind Desktop (TMD) is software that allows remote access to a personal computer's desktop (the host machine) from a client device. It is composed of a server running on the host machine and a client application which connects to the server. The application exposes a simple user interface that lets the user access and interact with the desktop of the host machine.

Basically, TMD is remote desktop software like RDP or VNC, but also (hopefully) like Parsec and Moonlight. You can find the download here:

Since it is the first beta version, you may find some bugs, crashes and a lot of limitations. Note that the software is in continuous development, so most of them will be fixed as soon as possible. In the next version, there will be fewer limitations and more features implemented. Thanks.

Planned release of TextureMind Desktop for 6 January 2025 will be a beta version

This release will be just a beta version with a simple implementation of the TMD protocol. It will be composed of two applications: a server and a viewer. The server can be launched manually on the host machine via the command prompt with admin rights. The viewer connects to the server on the host machine. For now, the software is portable and it won't handle sessions with system rights. All these limitations will be removed in the next releases.

This is a list of the limitations within the first beta version:

- No sessions, agents or system rights

- Portable, no installer

- No SSL connection

- Only via software encoding and decoding

- Linux: only Ubuntu 24

- Only default settings

However, you will be able to connect to a remote machine and test the new protocol as you wish. It can start on any version of Windows from Windows 7 onwards (Windows 10 or later is suggested).

What I will use to create a competitive environment for TextureMind products, in particular TMD

The end of the year is tomorrow and I will continue to work on TextureMind products as my regular job. After 13 years in the field of power computing and the last 9 years in AWS, I'm slowly building a competitive environment for the development of my software, with the best standards in mind. I have to guarantee that my software will run on most of the target platforms without bugs or crashes, so I need to work with the most relevant hardware and operating systems. Having just one desktop PC with Windows and any graphics card is not enough.

I already have a desktop PC with Windows 10 Pro and a GeForce RTX 3070 that I'm using to develop my software, in detail:

- Motherboard: ASUS ROG STRIX B550-F GAMING

- CPU: AMD Ryzen 9 3900X, 3800 MHz, 12 cores, 24 threads

- GPU: MSI GeForce RTX 3070 Ventus 2X OC, GDDR6 8 GB

- RAM: Corsair Vengeance 32 GB DDR4-3200

- Storage: Kingston 2.5" A400 SSD 960 GB

Recently, I bought an HP Pavilion Plus 14 with Windows 11 Pro to support AMD graphics cards, in detail:

- CPU: AMD Ryzen 7 7840U 3.3 GHz

- GPU: AMD Radeon 780M

- Screen: OLED with 2.8K Resolution (2880x1800)

- RAM: 16GB LPDDR5x 6400 MHz

- Storage: 1 TB SSD

To cover high resolutions like 4K, I have an ASUS TUF Gaming VG28UQL1A monitor at 144 Hz. To support multiple monitors and even higher refresh rates, I ordered an outstanding LG UltraGear OLED 32GS95UE monitor, with a maximum refresh rate of 240 Hz in 4K and 480 Hz in full HD in dual mode. The latter setting will be particularly useful for TextureMind Desktop (TMD), a remote display protocol where multi-monitor support and high refresh rates are mandatory.

I plan to buy a second desktop PC with more advanced features, like:

- Motherboard: ASUS ROG STRIX B650-A GAMING

- CPU: AMD Ryzen 9 7950X 4.5 GHz

- GPU: GeForce RTX 4080 or 50X0

- RAM: 128 GB DDR5 5600 MT/s or higher

- Storage: Lexar NQ790 4TB PCIe 4.0 M.2 NVMe 1.4 7000 MB/s

That will be useful for testing modern GPU applications, like Unreal Engine 5, and for producing features for advanced hardware.

I will install both Windows and Linux on the PC and make use of VMware virtual machines for testing the most relevant operating system distributions. Then I will port my framework and all the software to macOS, so I will need to buy at least a Mac mini M2 to support ARM processors and an older Mac mini to support Intel processors. I will also buy a Raspberry Pi 5 and an iPhone for iOS, while for Android I will use my current smartphone.

For professional cloud computing, I will use instances in both Amazon EC2 and Google Cloud Platform. This will be particularly useful for the development of TextureMind Desktop, since this kind of software is mostly used with remote virtual machines in a cloud service.

TextureMind Framework – Insights #5 – Serialization in TBF

TBF stands for TextureMind Binary Format and it's the format used to serialize binary data in the TextureMind Framework, as an alternative to XML and JSON. TextureMind Framework has an entire serialization system with an abstraction layer that can be specialized with different kinds of implementations, in human-readable text format or binary format. The built-in formats are:

- xml

- json

- raw

- tbf

All these formats are wrapped by the same abstract interface inside the framework. You can serialize a large set of types:

- Primitive types: bool_t, uint8_t, int8_t, uint16_t, int16_t, uint32_t, int32_t, uint64_t, int64_t, float, double

- Strings of any size

- Data structures of any kind

- TextureMind Objects

- Containers of objects
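The idea of several formats behind one abstract interface can be sketched in Python as follows. This is only an illustration of the pattern: the class and method names are hypothetical and are not the framework's C++ API, and only two text backends and four primitive types are covered.

```python
import json
import xml.etree.ElementTree as ET
from abc import ABC, abstractmethod

class Serializer(ABC):
    """Hypothetical common interface; each format provides its own backend."""

    @abstractmethod
    def dumps(self, fields: dict) -> bytes: ...

    @abstractmethod
    def loads(self, data: bytes) -> dict: ...

class JsonSerializer(Serializer):
    def dumps(self, fields):
        return json.dumps(fields, sort_keys=True).encode("utf-8")

    def loads(self, data):
        return json.loads(data.decode("utf-8"))

class XmlSerializer(Serializer):
    def dumps(self, fields):
        root = ET.Element("object")
        for name, value in fields.items():
            # Store the type name so the reader can restore the value.
            node = ET.SubElement(root, "field", name=name, type=type(value).__name__)
            node.text = str(value)
        return ET.tostring(root)

    def loads(self, data):
        casts = {"int": int, "float": float, "str": str, "bool": lambda s: s == "True"}
        return {f.get("name"): casts[f.get("type")](f.text or "") for f in ET.fromstring(data)}

record = {"width": 640, "ratio": 1.5, "title": "demo", "visible": True}
for backend in (JsonSerializer(), XmlSerializer()):
    print(type(backend).__name__, backend.loads(backend.dumps(record)) == record)
```

The calling code only depends on `Serializer`, so switching from a text format to a binary one would not change the code that saves or loads the data.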

You can serialize the content of single variables or multiple variables with a single call. You can save data in human-readable text formats like XML or JSON, or in binary format to save CPU and space. All the formats implement principles of backward / forward compatibility, except for raw data. With raw, you need to make sure that the writer and the reader share the same version number. Raw is very fast and keeps the serialization of complex data structures, like objects or containers of objects, without the burden of being compact or compatible between different versions. It has been programmed to guarantee high performance client / server transmission of very large amounts of data. All the formats are byte order agnostic and the reference byte order used in binary formats is little endian (on big endian machines, values are converted into little endian, even if there is still a way to bypass the conversion for performance reasons).
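The byte order rule can be illustrated with Python's `struct` module, where the `<` prefix forces little endian regardless of the host. This is a minimal sketch; the function names are hypothetical and the framework's own conversion code is not shown in this post.

```python
import struct
import sys

def write_u32(value: int) -> bytes:
    """Encode an unsigned 32-bit integer as little endian on any host."""
    return struct.pack("<I", value)

def read_u32(data: bytes) -> int:
    """Decode a little endian unsigned 32-bit integer on any host."""
    return struct.unpack("<I", data)[0]

encoded = write_u32(0x11223344)
# The hex string is the same whatever sys.byteorder reports.
print(sys.byteorder, encoded.hex())
```

The least significant byte (0x44) always comes first in the output, so a file written on a big endian machine can be read back unchanged on a little endian one.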

TextureMind Binary Format (TBF) has been designed to guarantee a compact and fast binary format with strong principles of backward and forward compatibility, like Google Protobuf. It has been used for client / server transmission of messages and for all the native formats in the TextureMind Framework for saving images, sounds, GUI pages, 2D and 3D models. It can be used to serialize a large amount of data, virtually up to the size limit of a 64 bit value, so you can save 3D models with billions of polygons and vertices. It's optimized for being fast and compact: integer values are stored in base128 and the fields are not tagged for every recurrence inside the data structure: they are deserialized thanks to a special system based on reading keys. You don't need to keep numeric tags like in Protobuf, but just the variable names. Unlike Protobuf, you don't need to build source code for every data structure in your project. You can also compress data with ZIP or LZ4 inside a serialized structure, or transport data files in native format without changing the original content (for example, you can transport an image in JPEG or a music track in ProTracker format).
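To give an idea of what storing integers in base128 means, here is a sketch of the common unsigned varint scheme (7 payload bits per byte, high bit set when more bytes follow), which is the one Protobuf uses. The exact encoding used by TBF is not described in this post, so treat this as an assumption rather than the actual TBF layout.

```python
def encode_varint(value: int) -> bytes:
    """Encode an unsigned integer with 7 bits per byte, low bits first."""
    if value < 0:
        raise ValueError("only unsigned values are handled in this sketch")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # continuation bit: more bytes follow
        else:
            out.append(byte)
            return bytes(out)

def decode_varint(data: bytes, offset: int = 0):
    """Return (value, next_offset); raise ValueError on malformed input."""
    result = 0
    shift = 0
    while True:
        if offset >= len(data):
            raise ValueError("truncated varint")
        if shift >= 64:
            raise ValueError("varint too long for a 64 bit value")
        byte = data[offset]
        offset += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, offset
        shift += 7

print(encode_varint(300).hex(), decode_varint(encode_varint(300)))
```

Small values take a single byte while a full 64 bit value takes ten, which is why this kind of encoding is compact for typical data. The decoder rejects truncated or overlong input instead of reading past the buffer.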

TextureMind Framework – Insights #4 – Use of VMware to increase compatibility

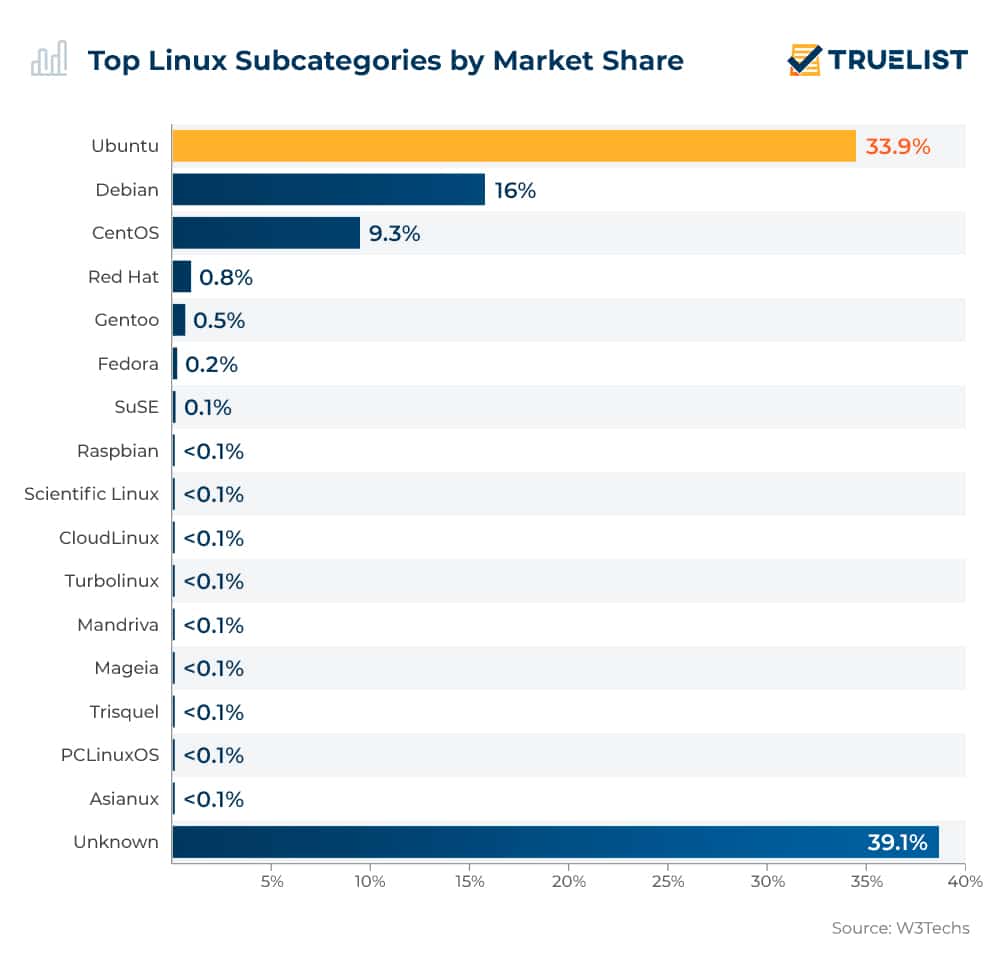

Since November 11, 2024, VMware Fusion and VMware Workstation are available for free to everyone, for commercial, educational and personal use. This is very good news because now I can use VMware to improve the compatibility of TextureMind Framework and all the derived software with different Windows versions and Linux distros. Currently, the framework has an experimental Linux port which is compatible only with Ubuntu 24 (you can already download DWorkSim 0.2 and test it). I will set up different virtual machines to extend the compatibility to the most relevant Linux distros on the market, like Debian, RHEL, Rocky, CentOS, SLES and Gentoo, following this chart:

With virtual machines available, it will be a lot easier than before. I already have a strategy to increase the range of supported distros for the framework and the derived software. Basically, I will create builds on old distros to cover the latest ones too (thanks to backward compatibility). The aim is to make it possible to download a single zip with the software and run it without installing anything else. The virtual machines will be used to extend the support to different versions of Windows as well.

TextureMind and all its activities will never be sold to any company

This is the biggest claim that you should be looking for. In a world where computer projects are created almost with the purpose of being sold in the future to big companies like Microsoft, Google, Amazon or Meta, I declare that I will never sell TextureMind Framework and its products to any company that might be interested in purchasing them in the future. This work is getting better and better, and in less than 2 months it will become my real full time job. I will never sell my business, not even for 100 billion dollars. No big company will ever make my work its personal playground. The software created using the framework will always be mine alone and will be distributed and possibly sold by me. It will never become the half-mutilated service of a large company or the whim of some billionaire. The project will always be the same and will remain faithful to its original intentions. This should interest you a lot, because this way my work will never lose its integrity and will not be corrupted by the need to make money that is typical of billion-dollar companies.

If you like my work and intend to donate, know that this money will never be wasted on a work that will betray you in the future just to make money. If you donate, you will help the creation of one of the few surviving works in the world that are made for passion and not for money.

There will be some big news coming soon. On January 6, 2025, the first working version of TextureMind Desktop, a remote display protocol, will be released. With this software, you will be able to connect to a remote workstation and play games or run 3D applications at 60 fps, for free. I'm also working on a bunch of other software, including a GUI editor, a 2D scene editor, a 3D scene editor, a full development environment, a paint application for creating textures and images with AI, a video editing application and more.

TextureMind Framework – Progress #26 – Remote display protocol

Ladies and gentlemen, I'm glad to announce that TextureMind Framework finally has its own remote display protocol. The protocol has been used to create another piece of software called TMD (TextureMind Desktop). The software is composed of two applications: a server running on the host machine and a client application which connects to the server. The client application exposes a simple graphical interface that lets the user access and interact with the desktop of the host machine.

In the first version, you can already view the desktop's screen and interact with the mouse and keyboard, with full support for Windows and Linux. Among the main features is old-school programming with the least number of external dependencies, optimized for high performance with the least possible use of hardware resources. Furthermore, the extreme portability makes it easy to use as a client / server ping-pong tool like iperf. Another strong point: it never crashes. Robust programming, together with debugging through static and dynamic code analysis, has made it possible to stream video continuously for days in a row without ever crashing, and with only a tiny amount of memory leaks, due mostly to the way memory works. Not bad for having been developed entirely by one person in a month.

Currently I'm working on improving the protocol for message transport. I'm building reliable transport on top of UDP. It will be possible to stream on both TCP and UDP protocols. After that, I will improve the video codecs by adding multithreaded JPEG compression and an AV1 encoder / decoder with ffmpeg. I will also try to support hardware encoding and decoding, at least on NVIDIA and AMD GPUs. It will be possible to play games in 4K resolution at 60 fps in your LAN. For free. TMD will have its own website (ASAP) where it will be possible to find news, documentation, screenshots and downloads.
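Reliable transport on top of UDP usually comes down to sequence numbers, acknowledgements and retransmission after a timeout. The sketch below shows only the sender-side bookkeeping, in memory and without sockets; the class name, the timeout value and the design are assumptions for illustration and do not describe the actual TMD transport.

```python
class ReliableSender:
    """Tracks unacknowledged datagrams so they can be resent after a timeout."""

    def __init__(self, timeout: float = 0.2):
        self.timeout = timeout
        self.next_seq = 0
        self.in_flight = {}  # seq -> (payload, time of last transmission)

    def send(self, payload: bytes, now: float):
        """Assign a sequence number and remember the datagram until acked."""
        seq = self.next_seq
        self.next_seq += 1
        self.in_flight[seq] = (payload, now)
        return seq, payload  # what would be written to the UDP socket

    def ack(self, seq: int):
        """Forget an acknowledged datagram; duplicate acks are ignored."""
        self.in_flight.pop(seq, None)

    def due_for_retransmit(self, now: float):
        """Return datagrams whose timer expired and restart their timers."""
        due = []
        for seq, (payload, sent_at) in sorted(self.in_flight.items()):
            if now - sent_at >= self.timeout:
                self.in_flight[seq] = (payload, now)
                due.append((seq, payload))
        return due

sender = ReliableSender(timeout=0.2)
sender.send(b"frame-0", now=0.0)
sender.send(b"frame-1", now=0.0)
sender.ack(0)                           # frame-0 arrived, frame-1 was lost
print(sender.due_for_retransmit(0.25))  # only frame-1 is resent
```

A real implementation would also need receiver-side reordering, duplicate suppression and congestion control, which are left out here.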

TextureMind Desktop – Release date 6 January 2025

The planned release date for TMD (TextureMind Desktop) is 6 January 2025. The software will be like VNC but designed to deliver high performance and high resolution at a high framerate. It will be composed of two applications: the server and the viewer. The usage will be simple: launch the server on the host machine and use the viewer to connect to the server. There will be support for display, input and audio, on Windows and Linux. Hopefully there will also be hardware encoding and SSL. It will be free, so you can test it as much as you want.

TMD will be mostly oriented towards high responsiveness scenarios, like videogames, but also professional applications like CADs or text editors. There will be two streaming modes: best quality vs. best framerate. Best quality will try to maximize the quality, reducing the framerate in case of network congestion. Best framerate, on the contrary, will try to keep a constant high framerate, reducing the quality in case of network congestion. Moreover, you will be able to try other client-side settings to maximize your experience. It will be possible to change the codec used for video streaming in real time, enable / disable quality updates, select the codec for quality updates, enable / disable hardware decoding, limit network bandwidth and so on.
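The difference between the two streaming modes can be sketched as a single control step that decides what to sacrifice under congestion. Everything in this example (the names, the step sizes, the lower bounds) is a hypothetical illustration, not the TMD algorithm.

```python
from dataclasses import dataclass

@dataclass
class StreamSettings:
    quality: int    # encoder quality, 1..100
    framerate: int  # target frames per second

def adapt(settings, mode, congested, min_quality=20, min_framerate=10):
    """Return the settings to use after one control step."""
    if not congested:
        return settings
    if mode == "best_quality":
        # Keep the picture quality and give up a quarter of the framerate.
        reduced = max(min_framerate, settings.framerate * 3 // 4)
        return StreamSettings(settings.quality, reduced)
    if mode == "best_framerate":
        # Keep the framerate and give up some picture quality.
        reduced = max(min_quality, settings.quality - 10)
        return StreamSettings(reduced, settings.framerate)
    raise ValueError(f"unknown mode: {mode}")

current = StreamSettings(quality=90, framerate=60)
print(adapt(current, "best_quality", congested=True))
print(adapt(current, "best_framerate", congested=True))
```

In both modes the settings are left alone when the network is healthy; the mode only selects which parameter absorbs the congestion.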

TMD will have its own website, with pages for documentation, screenshots, a download area and support. I will open a Freshdesk site, so you can open a ticket for the issues you find during your tests.

TextureMind Framework – Insights #3 – Basic principles

- Few dependencies. Most modern applications are gigantic bundles of third-party dependencies. While the adage “avoid reinventing the wheel” may be true, it is also true that including myriads of external projects into a single one only complicates build maintenance, increasing build times and hurting portability to other platforms. To avoid this, I set myself the goal of using as few external dependencies as possible, even at the cost of reinventing many little wheels within a single huge consistent project. As a reward, the entire framework takes only three and a half minutes to build in release mode, while other similar projects may take hours.

- Robustness. Everything in the framework has been programmed from scratch to guarantee robustness in the basic operations, like memory management, containers, data structures, parsing and serialization. The framework has been used to create other applications for years and the basic functionalities have been stress-tested with billions of iterations. The serialization format used to save data never crashes even if you replace bytes with salt and pepper noise.

- Consistency. The framework has been entirely coded in C++ with the fewest external libraries possible. Not even the Standard Template Library has been used, in favor of a custom implementation with a higher degree of control. A modular architecture has been used to avoid monoliths and to create plugins: multiple executables in the same project can share code through dynamic libraries. On Windows, the CRT has been included in the build because the software architecture allows it, so you don't have to install the Microsoft redistributables to make it work. You can even run it on a clean Windows installation without having to install anything else.

- Simplicity. Complexity exists only when something is poorly done. The entire framework was created with the goal of being simple and making life easier for those who use it. Everything must be about simplicity, from programming to project building, installation, application usage and configuration.

- High performance. All the algorithms and solutions have been designed for working with the highest performance, without having to rely on expensive hardware.

- Low resources. The applications created with the framework must work at their best with the minimum possible requirements in terms of hardware, memory and CPU usage. Whatever can be executed on the GPU must always provide a software alternative that runs on GPU-less machines: this is important to reduce costs if you are dealing with paid virtual machines in a cloud service.

- Scalability. The framework can be used to create a wide range of software, from a Pac-Man clone to client / server applications, interposition libraries or games with Unreal Engine 5 graphics. It can build on a wide range of platforms with different requirements, from the oldest to the latest operating systems. For example, an application could start on Windows 7 but also on Windows 11, or it may have a build for Raspberry Pi or, even more extremely, for AmigaOS 4.1.

- Hard work. The entire framework has been developed over the years by one person (me), making difficult choices that would only pay off after a huge amount of effort and without ever compromising or taking shortcuts. This has paid off a lot over time, and will probably be the key to success for the entire project in the future, given that the modern trend goes in the opposite direction.

- Passion. The framework was not born with the purpose of making money or building fame and popularity, but with the purpose of creating something beautiful for the pure pleasure of doing it. Programming is not seen as a mere tool to achieve a goal but as a pure form of art, without giving up the highest standards of modern computer science.

- No greed for money. The framework will also be used for commercial projects, but not out of greed for money. If it works, good; otherwise so be it. No big company will ever be able to get its hands on this project to make it its playground, so it will always be true to its basic principles. This project will never use clickbait, advertising or clever gimmicks to attract users' attention: if it gets noticed, it will only be thanks to the quality of the results obtained.

TextureMind Framework – Insights #2 – Still in a floppy disk!

In the past, the framework was so small that it could fit on a floppy disk, with an average application size of 250 KB. After 13 years, the framework is way larger and it has some external dependencies that make things worse, but it's nothing compared to modern applications. Let me say that the average size is 8 MB along with the 2D / 3D engines, the Vulkan / Cairo wrappers and the entire GUI system. However, during my latest analysis I noticed that an application with the common library alone is only 450 KB, which still fits on a floppy disk. Not bad, considering that today the total disk space required by an application can exceed 100 MB or even 1 GB. With the common library, you still have the full set of containers, networking, multi-threading, IPC, log files, compression and file management.

The core module contains all the code required to handle objects, containers of objects, plugins and serialization, and it's required by the other modules. An application with the core module has an average size of 3.5 MB. I think it can be improved with some optimizations. With some effort, it can be reduced to 2 MB. From my experiments, I can easily produce a reduced version of the core library which still lets the other modules work, with an average application size of 600 KB. It would be beautiful for the framework to create a self-contained application with modern graphics and audio which is still under 1.44 MB (a high density floppy disk). I really like the idea. I think I will put some work in this direction, in particular to make demos and games in the future.

TextureMind Framework – Insights #1 – Dropping DevIL

I have a huge external dependency to support a lot of image formats, like jpg, png, bmp, but also other weird stuff, like Paint Shop Pro and Doom textures. This dependency has been discontinued since 2018, it has a lot of other external dependencies (most of them obsolete as well), the API is old and the build system is tricky to maintain. The library is under the LGPL license, so I can only link the dll file dynamically. Recently, I tried DWorkSim on a fresh installation of Windows 10 Pro and it didn't work because DevIL had issues with the missing CRT files. To fix the issue, I would have to install the old Visual Studio redistributables or rebuild the dependency. I don't like it.

To simplify things, I decided to drop the DevIL libraries in favor of integrating built-in, dependency-free image libraries in the framework, like stb from nothings.org, which is compact, functional, well proven and public domain. Of course I will no longer have support for a lot of weird (and abandoned) image formats, but who cares. I will keep basic support for loading (and writing) bmp, png, tga, jpg, gif, pic, pgm, hdr in my picture module. Additionally, more image formats will be supported through external plugin modules with smaller and sustainable external dependencies, like turbo-jpeg, open-jpeg and libavif. This will greatly simplify the build process and the porting to other operating systems. I already implemented the image import / export part with stb and it works like a charm. Soon I will drop DevIL for good, so the build will be lighter and it will cover more systems without drawbacks or additional stuff to install.

Lighter is better!

TextureMind Framework – Progress #25 – Porting on Linux

Finally, I ported my entire framework to Linux. From now on, all the applications that I create for Windows will be supported on Linux too. It took a really great deal of work to achieve this. It was necessary to improve the management of external dependencies, do some builds, implement a meson-based build system for the framework and a build deployment system based on Python scripts. I had to fix literally hundreds of thousands of errors and resolve several technical issues related to the differences between the two operating systems. The first build of just the common library filled a log with 3.5 million lines of errors.

I had to analyze the errors and fix them one by one. After the common library, I had to repeat the same work for all the other components, but fortunately the errors were already much fewer. I had to implement the missing parts for Linux, including all the window and event handling. I also had to refactor the whole part for font management and the way screens are presented in the window, especially the Vulkan part. It was a lot of work: even though it took a little more than 2 weeks, I wrote more than 170 commits. Looking at the results in the video, I'd say it was definitely worth it. In the future, I will port my framework and all my work to other operating systems. The next one I want to support is macOS. I also have on my list: Raspberry Pi 5, Windows on Arm, Android, iOS and AmigaOS 4.1.

TextureMind Framework – Progress #24 – Preliminary work for Remoting Protocol

Currently I'm working on the development of the TMD (TextureMind Desktop) remoting protocol. I want to reach the point where it is possible to connect to a host machine as soon as possible. I took the chance to improve the framework architecture by implementing an entire plugin system. Now it's possible to add functionality through external modules. I added YUV conversion with the I420, YV12, NV12 and NV21 pixel formats. I improved the image format so now it's possible to serialize multi-plane YUV images. I made all the RGBA pixel formats endianness agnostic. I wrote an abstraction layer for video compression and I'm writing a plugin module with the ffmpeg libraries to support H.264 and AV1 compression. I already implemented the transport layer with support for TCP, where it's possible to send messages between processes through the network with my serialization system. Now I have to implement the communication channels for the remote session, in particular the display and input channels. I designed the entire architecture, where I think I made a lot of improvements compared to classic remote protocols. There will be an abstraction layer to capture or control not only the desktop but also applications. Imagine writing an application for a virtual museum where you want to have multiple users connected to it: you can deliver the application in the form of SaaS. A channel may have two modes of communication: per client or broadcast. As an example, the display channel is typically per client and the input channel is broadcast, but you can also extend the display channel to stream in broadcast. Finally, I designed a smart adaptive frame rate control to avoid jittering. The first version of TMD will support only software video encoding / decoding, but future versions will be optimized to support the GPU, in particular NVIDIA with NVENC and AMD with AMF.
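The four YUV pixel formats mentioned above are all 8-bit 4:2:0 layouts that differ only in how the chroma samples are arranged after the luma plane. The following sketch computes the plane offsets and sizes for each of them. It assumes tightly packed rows with no stride padding and even dimensions; the function name is hypothetical and this is not the framework's image format.

```python
def yuv420_layout(width, height, pixel_format):
    """Return (name, offset, size) for each plane of an 8-bit 4:2:0 frame."""
    if width % 2 or height % 2:
        raise ValueError("this sketch assumes even dimensions")
    luma = width * height
    chroma = (width // 2) * (height // 2)  # one sample per 2x2 block
    if pixel_format == "I420":    # planar: Y, then U, then V
        planes = [("Y", luma), ("U", chroma), ("V", chroma)]
    elif pixel_format == "YV12":  # planar: Y, then V, then U
        planes = [("Y", luma), ("V", chroma), ("U", chroma)]
    elif pixel_format == "NV12":  # semi-planar: Y, then interleaved U/V
        planes = [("Y", luma), ("UV", 2 * chroma)]
    elif pixel_format == "NV21":  # semi-planar: Y, then interleaved V/U
        planes = [("Y", luma), ("VU", 2 * chroma)]
    else:
        raise ValueError(f"unsupported pixel format: {pixel_format}")
    layout, offset = [], 0
    for name, size in planes:
        layout.append((name, offset, size))
        offset += size
    return layout

for fmt in ("I420", "YV12", "NV12", "NV21"):
    print(fmt, yuv420_layout(1920, 1080, fmt))
```

In every case the total is 1.5 bytes per pixel, half the size of a 24-bit RGB frame, which is one reason video codecs work on these formats.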

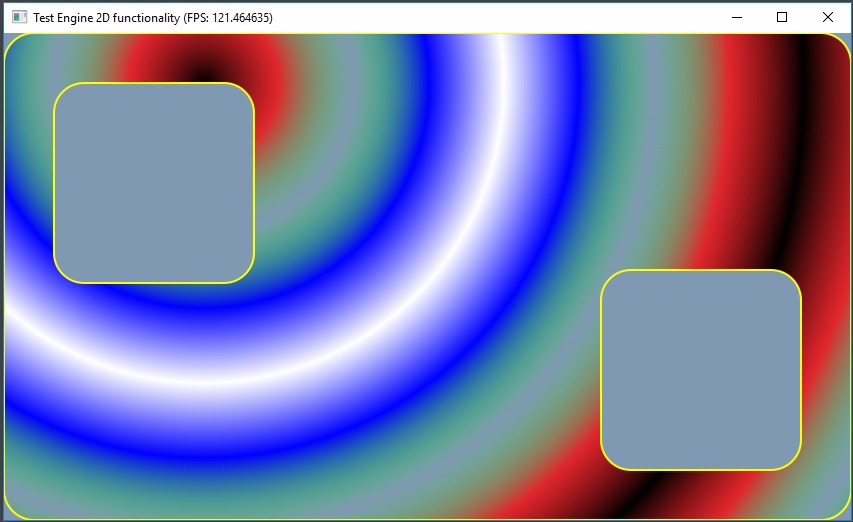

DWorkSim v0.2

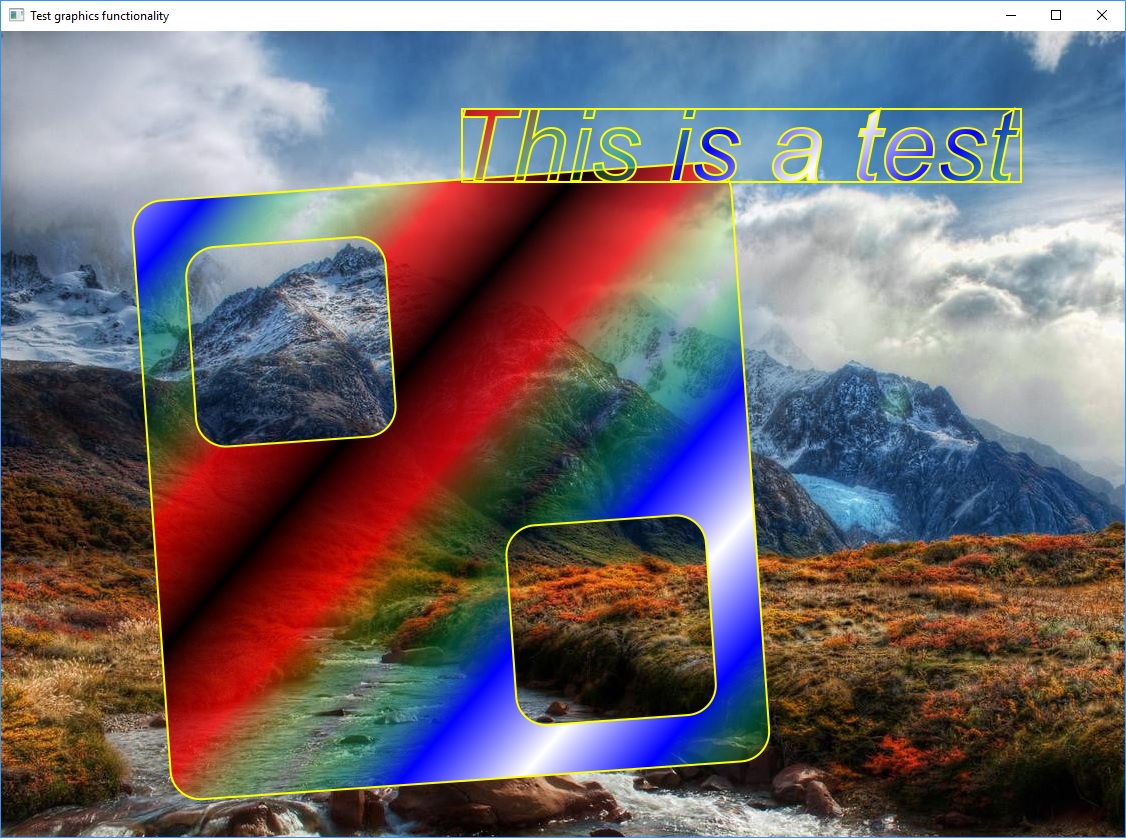

DWorkSim stands for Deterministic Workflow Simulator. It's software that I created with my TextureMind Framework for testing image transmission in remoting protocols, like Microsoft Remote Desktop or AWS DCV. Frames are identified by the number in the top-left corner. Frames with the same number will always have the same pixels, even if they are generated at different moments, on different machines.
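The determinism property can be illustrated with a toy generator whose output depends only on the frame number and the frame size. This is not how DWorkSim renders its frames (it draws animations with Cairo and Vulkan); the hash-based approach and the function name are assumptions made purely to show the principle.

```python
import hashlib

def generate_frame(frame_number: int, width: int, height: int) -> bytes:
    """Return width*height*3 RGB bytes that depend only on the arguments."""
    needed = width * height * 3
    out = bytearray()
    block = 0
    while len(out) < needed:
        # No clock, no random state: the same inputs give the same bytes.
        seed = f"{frame_number}:{width}x{height}:{block}".encode("ascii")
        out += hashlib.sha256(seed).digest()
        block += 1
    return bytes(out[:needed])

same = generate_frame(7, 16, 8) == generate_frame(7, 16, 8)
different = generate_frame(7, 16, 8) != generate_frame(8, 16, 8)
print(same, different)
```

Because the receiver can regenerate frame 7 locally, it can compare what arrived over the network against the exact original without ever transferring a reference image.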

DWorkSim is a good alternative to raw images for instantaneous PSNR estimation during the transmission of the animation sequence. It's probably the only software in the world that allows you to do that, because other software doesn't support both frame generation and PSNR estimation. You can also make all the estimations that are possible when comparing two images, like image coherence, blurriness, pixel accuracy, color distortion and text readability. DWorkSim is now at version 0.2. It can generate images to test the GPU and the CPU. The GPU test is different from the CPU test, to be more appropriate. The GPU test is performed with the Vulkan libraries, while the CPU test uses the Cairo libraries. The GPU test contains two shaders taken from Shadertoy:

- Full Spectrum Cyber: https://www.shadertoy.com/view/XcXXzS

- Where the River Goes: https://www.shadertoy.com/view/Xl2XRW
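For reference, the PSNR between a generated frame and the frame received through the remoting protocol is defined as 10·log10(MAX² / MSE), where MSE is the mean squared error between the two pixel buffers and MAX is 255 for 8-bit samples. Below is a minimal Python sketch of that formula; the function name is hypothetical and DWorkSim's own implementation is not shown here.

```python
import math

def psnr(reference: bytes, received: bytes, max_value: int = 255) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized buffers."""
    if len(reference) != len(received) or not reference:
        raise ValueError("frames must be non-empty and of equal size")
    mse = sum((a - b) ** 2 for a, b in zip(reference, received)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames: lossless transmission
    return 10.0 * math.log10(max_value ** 2 / mse)

original = bytes([100, 150, 200, 250])
degraded = bytes([101, 149, 201, 249])  # every sample is off by one
print(psnr(original, original), round(psnr(original, degraded), 2))
```

Higher values mean the received frame is closer to the original, and identical frames give an infinite PSNR.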

DWorkSim is Freeware for now, so you can use it for testing your software. For the download, visit:

DWorkSim – Deterministic Workflow Simulator

TextureMind Framework – Progress #23 – 2D geometries

I refactored the whole geometry module to eliminate redundancy and to introduce new classes for advanced functionality. I redesigned my 2D shape model to be lighter and more compatible with current standards. For example, now it's possible to create a 2D path which is compatible with the SVG format. I implemented a simple SVG path parser, so it's possible to pass the string directly to the 2D path object, and it will be converted to the custom format.
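To show what parsing an SVG path string involves, here is a small tokenizer that splits path data into command letters and their numeric arguments. It is a sketch under simplifying assumptions: it does not validate argument counts, does not expand implicit repeated commands, skips unknown characters silently and does not handle compact arc flags. It is not the parser used in the framework.

```python
import re

# A command letter, or a number with optional sign, fraction and exponent.
_TOKEN = re.compile(
    r"([MmZzLlHhVvCcSsQqTtAa])|([-+]?(?:\d+\.?\d*|\.\d+)(?:[eE][-+]?\d+)?)"
)

def parse_svg_path(d: str):
    """Split SVG path data into (command, [numbers]) pairs."""
    commands = []
    for letter, number in _TOKEN.findall(d):
        if letter:
            commands.append((letter, []))
        elif not commands:
            raise ValueError("path data must start with a command letter")
        else:
            commands[-1][1].append(float(number))
    return commands

print(parse_svg_path("M10 20L30,40 C1.5-2 .5.5 3e1 4z"))
```

SVG allows numbers to be packed together without separators (`1.5-2` and `.5.5` are two numbers each), which is why a regular expression is used instead of splitting on spaces and commas.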

In the picture above you can see an SVG path rendered with my 2D engine and the Cairo libraries. I also added 2D geometric primitives, such as circles, ellipses, arcs, rounded rectangles and splines. These elements are now also part of the 2D engine, so they are automatically converted and drawn by the graphics device. I also added two components to the geometry module: ShapeGenerator and CollisionDetector. ShapeGenerator is a component that generates 2D shapes from a few arguments and from operations between other shapes. It will be used to convert 2D paths to Bezier curves, lines or polygons, or to generate complex shapes from a math model. The CollisionDetector component will be used for collision detection between simple shapes, like points, lines, rectangles, circles and polygons. The next step is to extend these modules to the 3D world, implementing 3D geometries, collision detection and generation of lathe and extrusion objects.
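As an example of the kind of tests a collision detector for simple shapes performs, here are two classic checks: circle against circle and circle against an axis-aligned rectangle. The function names and signatures are hypothetical and do not reflect the CollisionDetector API; touching shapes are counted as colliding.

```python
def circles_collide(ax, ay, ar, bx, by, br):
    """Two circles overlap when the centers are closer than the radii sum."""
    dx, dy = bx - ax, by - ay
    # Compare squared distances to avoid a square root.
    return dx * dx + dy * dy <= (ar + br) ** 2

def circle_hits_rect(cx, cy, radius, left, top, right, bottom):
    """Circle vs axis-aligned rectangle (assumes left <= right, top <= bottom)."""
    # Clamp the center to the rectangle to find its nearest point.
    nearest_x = min(max(cx, left), right)
    nearest_y = min(max(cy, top), bottom)
    dx, dy = cx - nearest_x, cy - nearest_y
    return dx * dx + dy * dy <= radius * radius

print(circles_collide(0, 0, 1, 1.5, 0, 1), circles_collide(0, 0, 1, 3, 0, 1))
print(circle_hits_rect(5, 5, 1, 0, 0, 4.5, 4.5), circle_hits_rect(6, 6, 1, 0, 0, 4.5, 4.5))
```

Both tests work on squared distances, which keeps them cheap enough to run many times per frame.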

TextureMind Desktop

TextureMind Desktop (TMD) is software that allows remote access to a personal computer's desktop (the host machine) from a client device. It is composed of a server running on the host machine and a client application which connects to the server. The application exposes a simple user interface that lets the user access and interact with the desktop of the host machine.

TextureMind Framework – Progress #22 – OpenAL Soft

In parallel with the GUI Editor, I developed the audio part of my framework. I wrote an abstraction layer to handle the AudioContext and implemented it with the OpenAL Soft library (though it can be implemented with any other audio library). I also created an abstraction layer to handle audio tracks and sounds. It's now possible to load a sample in Ogg Vorbis format and play it with the audio context. The context can play many sounds at the same time, handling the queue of sounds in flight. A sound can be played once until it ends, or played in a loop and stopped later. The audio context automatically manages the sounds that are playing in the background and those that are about to end. It's possible to group several sounds under the same slot to manage master volume, frequency and other parameters. For example, in a video game it would be useful to play sound effects in one slot and the music track in another, each with its own volume. The conversion between audio channel layouts is managed automatically: you can load a Dolby Surround 7.1 audio track and play it on a stereo system, and vice versa. Finally, you can also play sounds in a virtual 3D environment, where the listener is positioned in 3D space. The next step will be to add real-time DSP effects and to load and play music in the MOD file format.
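The slot idea can be pictured with a small sketch. `Sound` and `SlotMixer` are hypothetical names, not the AudioContext API, and the rule "gain = sound volume × slot master volume" is an assumption made for illustration.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>

// Hypothetical types for illustration only.
struct Sound {
    std::string slot;  // name of the slot the sound is grouped under
    float volume;      // per-sound volume in [0, 1]
};

class SlotMixer {
public:
    // Set the master volume of a slot, creating the slot if needed.
    void setSlotVolume(const std::string& slot, float volume) {
        assert(volume >= 0.0f && volume <= 1.0f);
        slotVolume_[slot] = volume;
    }

    // Gain applied to a sound: its own volume scaled by its slot's master volume.
    // Sounds in an unknown slot are played at their own volume.
    float effectiveGain(const Sound& s) const {
        const auto it = slotVolume_.find(s.slot);
        const float master = (it != slotVolume_.end()) ? it->second : 1.0f;
        return s.volume * master;
    }

private:
    std::unordered_map<std::string, float> slotVolume_;
};
```

With a "music" slot at 0.5 and an "effects" slot at 1.0, lowering the music never touches the effects, which is the use case described above.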

Two big announcements

My paternity leave is about to end. I took advantage of this break to continue working on TextureMind Framework in my spare time (programming is my lifelong passion). I wrote about 800 commits and finished most of the parts that were incomplete. I refined the resource system, supporting dynamic resources and content-based optimizations. I finalized binary serialization and file formats. I made it 100% resilient to errors and corruption, so it can pass any fuzz test or existing AppSec criteria. I added support for the system clipboard, and now it's possible to copy & paste anything between processes: text, images, audio tracks, 3D models, anything. I implemented a complete audio engine with OpenAL Soft. I completed the GUI system and created a professional GUI Editor that is almost finished. I started to support networking, so it's already possible to implement client / server applications, exchanging messages between processes with all the complexity of my serialization system, with my cross-platform, backward / forward compatible binary formats. Now it's getting serious.

I have two big announcements. The first is that 2024 will be my last year of work at NICE / AWS as a developer of the NICE DCV software. I will no longer be able to work for Amazon because, under the company's Return To Office policy, it's not possible to develop remotely and I cannot move from my city. For now I'm in a sort of "grace period", but soon it won't be possible to keep working for the company and I will leave, I think by the end of the year. The second announcement is that when I leave my job, I will dedicate myself to TextureMind and make it my real job. Many applications and projects will come from this point onward: it will be the beginning of a new era. Among the many things I intend to do, I am working on my own remote desktop protocol, totally original and written from scratch with my TextureMind Framework. It will be called TMD (TextureMind Desktop). I have many years of experience as an employed developer of this type of software and I am perfectly capable of creating my own from scratch. The software will not only be a remote desktop protocol, but also the virtualization protocol for my applications, because I want to release some of them as SaaS (software as a service). Everything will be developed in C++ with optimizations, high performance and the minimum number of external dependencies. It will be great. Soon I will publish some videos to show my latest progress.

If you are interested in my projects and want to start supporting my future business, you can make a donation to:

https://www.texturemind.com/donate/

Thanks.

300 commits in 1 month!

I'm closing the month of April with 300 commits, all in just one month. I wrote most of the code for the GUI Editor application and all the missing audio parts, with support for Dolby Surround and 3D environments.

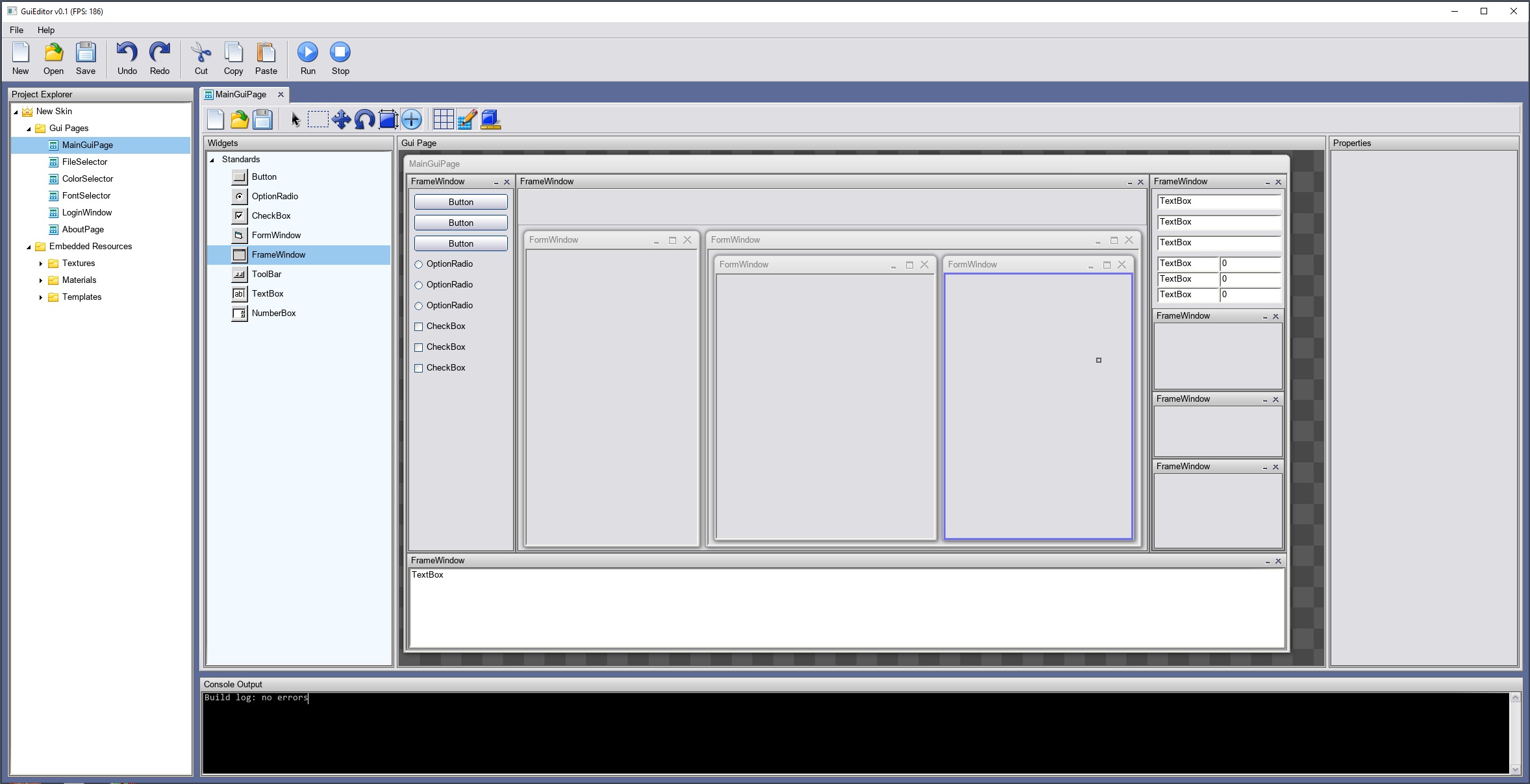

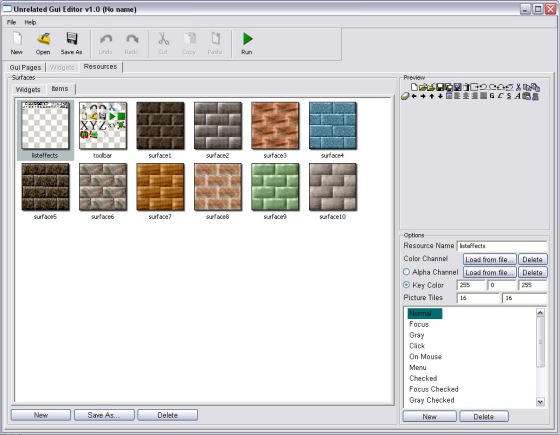

TextureMind Framework – Progress #21 – Gui Editor

When the application is complete, it will be forked internally to create another application called "IdealMind". With IdealMind, it will be possible to manage resources, create 2D and 3D scenes and design new applications with a programming language. The main goal is to create a development environment for quick and easy prototyping of new applications, without sacrificing power and stability.

OutVideoWork – Work in progress

This application is designed for creating professional visual effects and for non-linear video editing. It will use the FFmpeg library for the video part and my framework for the graphics part, in particular for programming effects with GPU shaders, creating animations with the advanced animation system, and ray-traced rendering.

ShaderMind – Work in progress

ShaderMind will be designed for the automatic creation of shaders compatible with Shadertoy and other environments. It will be possible to copy & paste shaders from Shadertoy (or other sites) and see them work in ShaderMind in no time, so the new shaders can be saved and reused in other projects. In the same way, you can design new shaders and export them to Shadertoy without additional effort. The shaders will be designed with a 3D modelling editor, where the scene is previewed with my engine and my material system: when finished, the entire scene can be converted into one or more shader programs compatible with Shadertoy. Resources can be converted into GLSL source code to include in the shader program, with some limitations. For example, a small texture or a small 3D object can be converted into source code, but not large textures or huge 3D models. The scene will basically be composed of signed distance functions rendered with a simplified material system. Complex resources like Bézier outlines for 2D or 3D text rendering will be converted into source code as well.

MultiEdge Paint – Work in progress

MultiEdge Paint will be professional software for painting artistic images and generating seamless textures. It will use generative AI to create new images or modify existing ones, with the latest features like txt-to-img, inpainting and outpainting. It will be possible to set up the environment locally (not as a remote service, so it will require a powerful GPU) and download the checkpoints directly from civitai.com. It is called "multi-edge" as a reference to horizontal, vertical and temporal looping: it can loop across multiple "edges" of the images, handling multiple layers and maps at the same time.

IdealMind – Work in progress

Originally TextureMind Framework IDE, IdealMind is designed to manage resources (like textures, materials, 3D models) and to create applications with TextureMind Framework's functionalities. The term "Ideal" stands for: Integrated Development Environment Application Level, but it's also nice to associate it with the word "Mind", which is part of the TextureMind logo.

With IdealMind, it will be possible to create any kind of application, from a simple window with a rectangle inside of it to an entire 3D game. You can create new projects, add resources like textures, materials, audio, design new graphics interfaces, handle input events, render 3D models, execute scripts and so on. It can be used also to create tests and to handle graphics workflows.

TextureMind Framework – Progress #20 – GUI is complete!

It's done! Taking advantage of the extra time I have now, I finished the GUI associated with my TextureMind framework. Compared to the previous update, I added Frame Windows, Tree Views, Tab Strips, Tool Bars and Docking Layouts. I fixed a lot of bugs, improved the management of resources and events, optimized the algorithms that draw on screen and so on. One of the big improvements is in the management of resources. I introduced the concept of a resource package. A project and its internal components may each have an associated resource package, which contains materials, textures and templates. The project's resources are considered the main package, but a single component may store an independent package of resources used for rendering itself. When the component is exported, it can save internally only the resources used for its rendering. When the component is imported, its internal resources are merged into the resources of the main project: if the GUIDs are the same, the project's existing resources are reused, otherwise the component's resources are added to the main project.
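The import rule can be sketched in a few lines. This is only an illustration: `Guid`, `Resource`, `ResourcePackage` and `mergePackage` are hypothetical names, with a GUID modelled as a string key.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Hypothetical stand-ins: a GUID as a string key and a resource as a named blob.
using Guid = std::string;
struct Resource { std::string name; };
using ResourcePackage = std::map<Guid, Resource>;

// Merge a component's package into the project's main package.
// Resources whose GUID already exists in the project are reused (the project's
// copy is kept); the others are added. Returns how many resources were added.
std::size_t mergePackage(ResourcePackage& project, const ResourcePackage& component) {
    std::size_t added = 0;
    for (const auto& [guid, resource] : component) {
        assert(!guid.empty());  // this sketch treats an empty GUID as a caller error
        // emplace() leaves the map untouched when the key is already present.
        if (project.emplace(guid, resource).second) {
            ++added;
        }
    }
    return added;
}
```

Importing a component whose package shares one GUID with the project and brings one new GUID therefore adds exactly one resource.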

I also implemented a totally dynamic model for loading and initializing resources. Previously, all the resources were loaded, stored in memory and uploaded to GPU memory at once. Now, only the resources used for rendering within a number of consecutive frames are loaded, stored and uploaded. Additionally, you can specify a number of preloaded resources associated with a component: these resources are kept alive only for the lifespan of the component. In this way, it's virtually possible to explore terabytes of data stored somewhere, as in modern engines like Unreal Engine, or to optimize for hardware where RAM is limited. I have now started to work on the GUI editor, which is at a good point of development as well. The GUI editor will be used to design new interfaces and also to create new skins, with any kind of appearance. The entire GUI system is designed for applications and video games, so it must be robust across a wide range of use cases.
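One way to picture "keep only what recent frames used" is a cache that remembers the last frame in which each resource was needed. This is a sketch under assumptions: `FrameResourceCache` is a hypothetical name, resources are identified by a string, and "loading" is reduced to bookkeeping.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <unordered_map>

// Hypothetical sketch: a real engine would load from disk and upload to the GPU
// in use(), and release memory in collect().
class FrameResourceCache {
public:
    explicit FrameResourceCache(std::uint64_t keepAliveFrames)
        : keepAliveFrames_(keepAliveFrames) {}

    // Called whenever the renderer needs a resource: load it on first use,
    // otherwise just refresh its last-used frame.
    void use(const std::string& name, std::uint64_t frame) {
        lastUsed_[name] = frame;
    }

    // Called once per frame: drop resources that have not been used within
    // the last keepAliveFrames_ frames.
    void collect(std::uint64_t frame) {
        for (auto it = lastUsed_.begin(); it != lastUsed_.end();) {
            assert(it->second <= frame);
            if (frame - it->second > keepAliveFrames_) {
                it = lastUsed_.erase(it);
            } else {
                ++it;
            }
        }
    }

    bool isLoaded(const std::string& name) const { return lastUsed_.count(name) != 0; }

private:
    std::uint64_t keepAliveFrames_;
    std::unordered_map<std::string, std::uint64_t> lastUsed_;
};
```

Resources preloaded for a component would simply bypass `collect()` until the component is destroyed.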

DWorkSim – Deterministic Workflow Simulator

This application was created with the aim of generating an animated scene where the frames are always the same. Each frame is associated with a number, so it is possible to capture individual frames and compare them, to see whether there are any differences after the frames have been processed by a second application, such as a remote display protocol. Furthermore, the workflow is generated at a low computational cost, so it can be used to measure the performance of the remote display protocol without excessive impact on the entire system. This software is freeware, so you can use it for free, even for commercial products (note that you cannot redistribute this software, sell it, change the author's name or modify the content: read the EULA document in the zip file for more information).
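The principle can be sketched as follows. This is not DWorkSim's code: `renderFrame`, `checksum` and `frameIsIntact` are hypothetical names, the scene is a trivial gradient, and the FNV-1a checksum is just one convenient way to compare buffers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical scene: every pixel is a pure function of (frame, x, y), with no
// clocks or random numbers involved, so frame N is identical on every run.
std::vector<std::uint8_t> renderFrame(std::uint32_t frame, int width, int height) {
    assert(width > 0 && height > 0);
    std::vector<std::uint8_t> pixels;
    pixels.reserve(static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            // A diagonal gradient that scrolls by one step per frame.
            const std::uint32_t value = static_cast<std::uint32_t>(x + y) + frame;
            pixels.push_back(static_cast<std::uint8_t>(value & 0xFFu));
        }
    }
    return pixels;
}

// 64-bit FNV-1a checksum of a frame buffer.
std::uint64_t checksum(const std::vector<std::uint8_t>& pixels) {
    std::uint64_t hash = 14695981039346656037ull;  // FNV offset basis
    for (std::uint8_t byte : pixels) {
        hash ^= byte;
        hash *= 1099511628211ull;                  // FNV prime
    }
    return hash;
}

// A captured frame is intact when its checksum matches the reference frame
// regenerated from the same frame number.
bool frameIsIntact(std::uint32_t frame, int width, int height,
                   const std::vector<std::uint8_t>& captured) {
    return checksum(captured) == checksum(renderFrame(frame, width, height));
}
```

An exact comparison like this only suits lossless pipelines. For a lossy remote display protocol, a per-pixel error metric against the regenerated reference frame would be the natural replacement.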

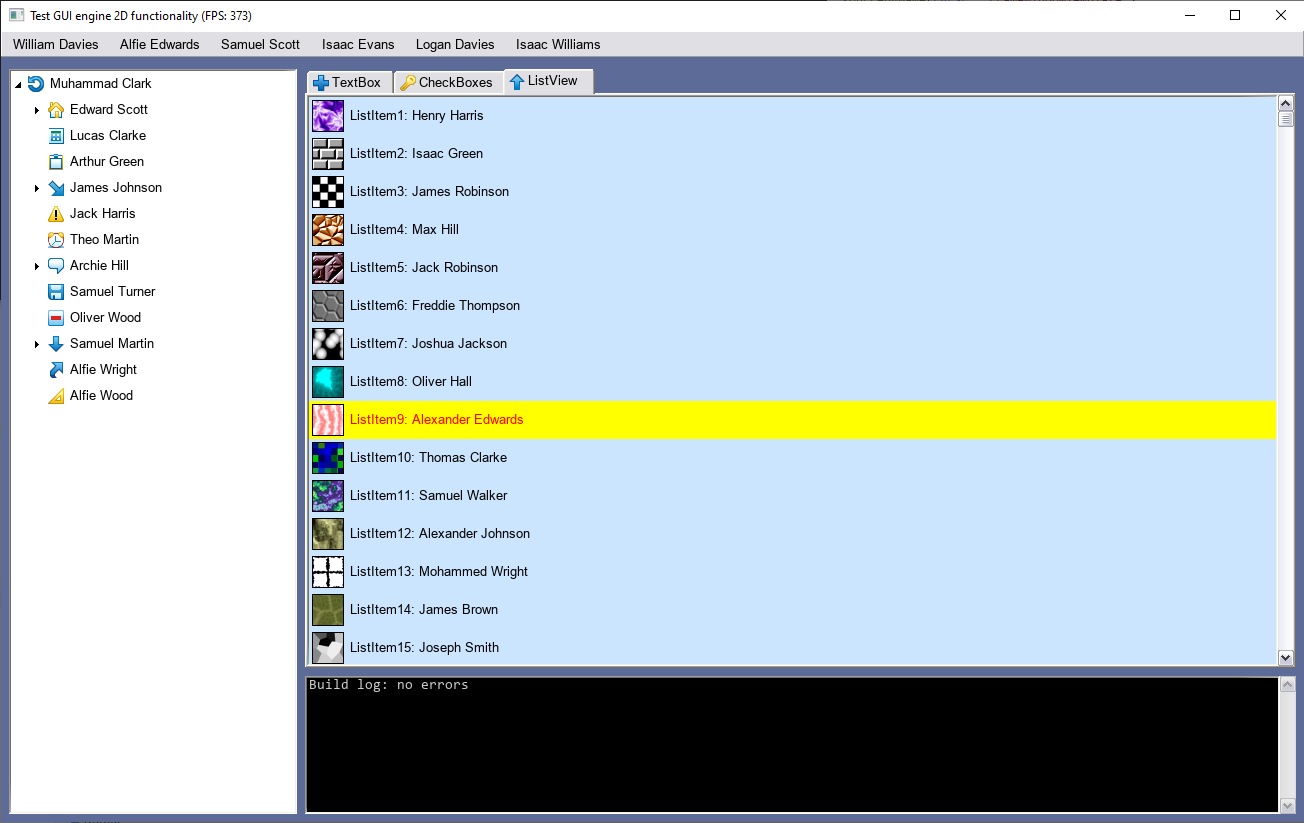

TextureMind Framework – Progress #19 – ListViews, Menus and ComboBoxes

I recently focused my efforts on the development of some widgets for displaying lists of items, in particular: listviews, menus and combo boxes. Listviews are optimized to handle hundreds of thousands of items without causing the application to slow down too much. Listviews can be configured to draw colors corresponding to different events, such as when the mouse passes over an item, when it's clicked, selected or out of focus. It is possible to select a single item or a range of items by holding down the SHIFT key, or add or subtract a single item by holding down the CTRL key.
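The selection rules can be modelled with a small state machine. The sketch below uses a hypothetical `ListSelection` class and stores selected indices in a `std::set`, which is fine for illustration but would be replaced by index ranges in a list with hundreds of thousands of items.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <set>

// Hypothetical selection model mirroring the SHIFT / CTRL behaviour described above.
class ListSelection {
public:
    void click(std::size_t index, bool shift, bool ctrl) {
        if (shift && hasAnchor_) {
            // SHIFT: select the whole range between the anchor and the clicked item.
            selected_.clear();
            const std::size_t lo = std::min(anchor_, index);
            const std::size_t hi = std::max(anchor_, index);
            for (std::size_t i = lo; i <= hi; ++i) selected_.insert(i);
            assert(selected_.size() == hi - lo + 1);
            return;  // the anchor stays where it was
        }
        if (ctrl) {
            // CTRL: toggle just this item, keeping the rest of the selection.
            if (selected_.erase(index) == 0) selected_.insert(index);
        } else {
            // Plain click: select only this item.
            selected_.clear();
            selected_.insert(index);
        }
        anchor_ = index;
        hasAnchor_ = true;
    }

    bool isSelected(std::size_t index) const { return selected_.count(index) != 0; }
    std::size_t count() const { return selected_.size(); }

private:
    std::set<std::size_t> selected_;
    std::size_t anchor_ = 0;
    bool hasAnchor_ = false;
};
```

Clicking item 2, then SHIFT-clicking item 5, selects items 2 to 5. A CTRL-click on item 3 then removes only that item.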

When one or more items are selected, an event is generated through a callback system. Another interesting innovation concerns the menus. I used listviews to implement an entire drop-down menu system with a rather classic setup. Menu boxes can be opened and closed in real time. When an item is clicked, the click is reported to the application via the same callback system used for the listviews. Keep in mind that this GUI is drawn inside the screen, so these widgets can never extend outside the application window: they have to adapt dynamically to best fit the available space. After implementing the menus, it was possible to implement combobox widgets as well. When you click on the combobox, a drop-down menu opens, and clicking an entry changes the selected item within the box, generating an event. As with the menus, the drop-down adapts to the space available on screen when the window is resized. The positioning of the drop-down menu also takes into account any geometric transformation applied to the widget that opens it, repositioning it in the best way.
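A simplified version of the "fit inside the window" logic might look like this. The `Box` type and `placeDropDown` function are hypothetical, and the sketch ignores the geometric transformations mentioned above: it works on plain axis-aligned pixel rectangles.

```cpp
#include <algorithm>
#include <cassert>

struct Box { int x, y, width, height; };  // hypothetical pixel rectangle

// Place a drop-down of size (menuW, menuH) for a widget inside a window.
// Prefer opening below the widget; flip above when there is no room below
// but there is above; finally clamp so the menu stays inside the window.
Box placeDropDown(const Box& window, const Box& widget, int menuW, int menuH) {
    assert(menuW >= 0 && menuH >= 0);
    int x = widget.x;
    int y = widget.y + widget.height;  // default: just below the widget
    const int windowBottom = window.y + window.height;
    const bool fitsBelow = y + menuH <= windowBottom;
    const bool fitsAbove = widget.y - menuH >= window.y;
    if (!fitsBelow && fitsAbove) {
        y = widget.y - menuH;          // flip above the widget
    }
    // Clamp to the window; a menu larger than the window is pinned to its top-left edge.
    x = std::max(window.x, std::min(x, window.x + window.width - menuW));
    y = std::max(window.y, std::min(y, windowBottom - menuH));
    return Box{x, y, menuW, menuH};
}
```

Calling it again on every window resize keeps the open menu inside the visible area.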

So I can say that the GUI is almost finished. There are a couple of missing widgets, but they are not complicated to implement. I don't think I will create other videos about GUI updates because I will focus on other features. When completed, this GUI will be used to create applications and video games. The next step is to implement an editor to create GUI pages that can be used inside applications.

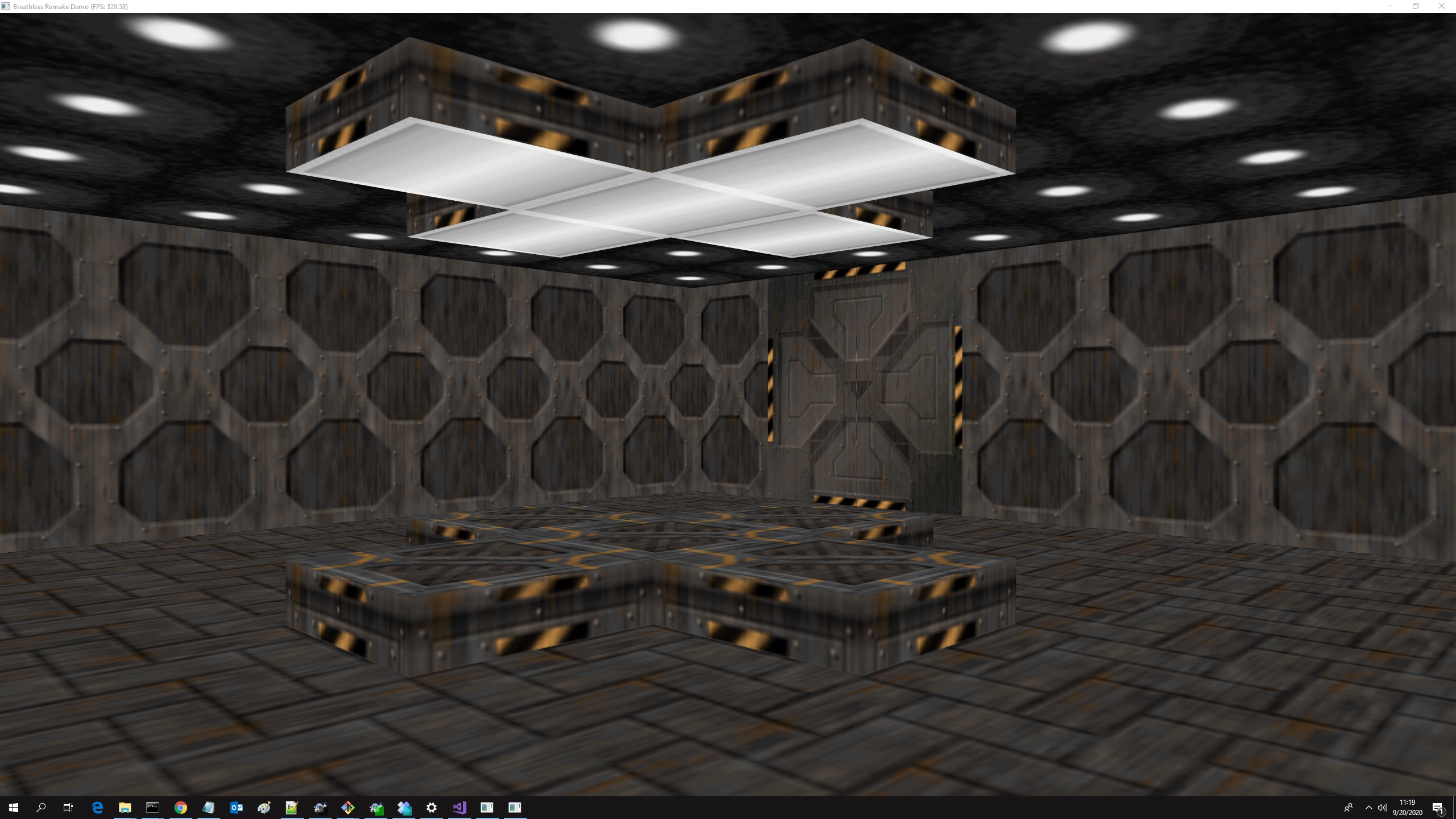

PC Breathless Demo #1

I'm glad to release the first demo of PC Breathless, which you can try on your own desktop or laptop PC. The minimum requirements are Windows, a GPU and the Vulkan libraries installed.

You can look around with the mouse, move forward and backward with the up / down arrow keys, and optionally move up and down with the left / right mouse buttons.

This demo is not a big step forward from the demo already published on YouTube. The main difference is the cleanup and the fact that you can safely run it on your PC without issues (make sure the Vulkan libraries are installed). If you like this project and want it to continue in the future, please make a donation to support my work. It will be appreciated.

For now, you can see the third stage with no collision detection applied. In future demos, I will implement actual walking with collision detection, and the missing code to open the doors. As you can see, the graphics are very simple, without any lights, sky, characters or animated textures. That's because the 3D engine is still under development. As a next step, I will introduce a new lighting system with PBR. I don't rule out implementing a ray-tracing renderer in the future as well.

Old framework’s GUI

This demo shows the GUI system of my old project "Unrelated Framework". This GUI was programmed from scratch and supports both software and GPU rendering. In this case, the demo uses OpenGL for rendering. The GUI implements the most important widgets, like buttons, text boxes, form windows, tab strips, list views and menus. In this demo, you can find some windows on a desktop background, with tabs and text boxes. You can minimize and maximize the windows, click on the option buttons, write some text and test the popup menu. The rotating images belong to deferred contexts, which are drawn into drawables that are presented inside the GUI's windows.

Currently, I'm re-implementing the whole GUI in my new project "TextureMind Framework", which is more advanced and accurate than the old one. For instance, in this demo the text is drawn with bitmap fonts precalculated inside one or more textures, while in the modern TextureMind framework all the fonts are automatically generated by the engine. In particular, the new framework can use both bitmap and outline fonts, if supported by the graphics device (currently, I'm working on implementing path rendering and outline fonts in Vulkan). Another difference from the past is the material system: in the past, everything was made to be rendered with simple image patterns, while in the modern engine I have implemented a full and complex material system made of visual expression nodes and shader programs (where available). However, the old demo was programmed 15 years ago, which is amazing considering the complexity of the features that I'm still trying to port to the modern framework.

Frame rate adaptation with linear interpolation

When you create a game, you need to consider the target framerate of your application: it will affect the entire dynamic of the physics engine and all the choices that will make the game playable. However, given the big heterogeneity of modern graphics cards with different types of monitors, the target frame rate may not be available on all configurations. It's therefore necessary that the target frame rate is scaled adequately to make the graphics equally fluid and to not affect the gameplay dynamics.

The demo presents one of the existing solutions to this type of problem, based on frame adaptation with linear interpolation for trajectory correction. In practice, the application frame rate is the vsync frame rate, while the target frame rate is used to calculate deltaT. A linear interpolation of the trajectories is used to bridge the gap between the target frame rate and the vsync frame rate, generating frames that would not otherwise exist in order to reach the vsync frame rate. As a consequence, the F1 option is smoother than the F2 option.
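A common way to implement this idea is a fixed physics step with an accumulator and interpolated rendering. The sketch below follows that well-known pattern and is not taken from the demo: `Body` and `FixedStepSimulation` are hypothetical names and the physics is reduced to one-dimensional motion at constant velocity.

```cpp
#include <cassert>

// Hypothetical 1D body; the demo's actual physics is not shown in the post.
struct Body { double position; double velocity; };

class FixedStepSimulation {
public:
    FixedStepSimulation(Body start, double targetFps)
        : previous_(start), current_(start), deltaT_(1.0 / targetFps) {
        assert(targetFps > 0.0);
    }

    // Advance by the real time elapsed since the last displayed (vsync) frame.
    // Physics always steps with the same deltaT, whatever the display rate is.
    void advance(double elapsedSeconds) {
        accumulator_ += elapsedSeconds;
        while (accumulator_ >= deltaT_) {
            previous_ = current_;
            current_.position += current_.velocity * deltaT_;
            accumulator_ -= deltaT_;
        }
    }

    // Position to draw: linear interpolation between the last two physics
    // states, weighted by how far we are into the next physics step.
    double renderPosition() const {
        const double alpha = accumulator_ / deltaT_;
        return previous_.position + (current_.position - previous_.position) * alpha;
    }

private:
    Body previous_;
    Body current_;
    double deltaT_;
    double accumulator_ = 0.0;
};
```

With a target rate of 4 steps per second and a display running at 8 frames per second, every second displayed frame falls halfway between two physics states and is drawn at the interpolated position. The price is that the picture lags the simulation by up to one physics step.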

Tetris solver V1.0

If you like Tetris, you are going to enjoy this demo. Basically, it is a bot that solves Tetris, which I programmed many years ago. In this case, the only technique the AI uses to place pieces is the "hard drop", so horizontal moves and T-spins are not supported.
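To show what restricting the bot to hard drops means in practice, here is a minimal sketch. It is not the solver's code: the column-height board representation, the function names and the "pick the lowest landing row" heuristic are all assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// heights[c] : current stack height of column c (0 = empty column).
// bottom[i]  : for the piece's i-th column, how many empty cells lie between
//              the piece's lowest row and its lowest filled cell in that column.
// Returns the row (counted from the floor) where the piece's lowest row comes
// to rest when hard-dropped with its left edge at column `col`.
int hardDropRow(const std::vector<int>& heights, const std::vector<int>& bottom,
                std::size_t col) {
    assert(!bottom.empty() && col + bottom.size() <= heights.size());
    int row = 0;
    for (std::size_t i = 0; i < bottom.size(); ++i) {
        row = std::max(row, heights[col + i] - bottom[i]);
    }
    return row;
}

// Assumed heuristic: try every column and keep the one with the lowest
// landing row (the leftmost column wins ties).
std::size_t lowestDropColumn(const std::vector<int>& heights, const std::vector<int>& bottom) {
    assert(!bottom.empty() && bottom.size() <= heights.size());
    std::size_t best = 0;
    for (std::size_t col = 1; col + bottom.size() <= heights.size(); ++col) {
        if (hardDropRow(heights, bottom, col) < hardDropRow(heights, bottom, best)) {
            best = col;
        }
    }
    return best;
}
```

A real solver would also try every rotation and score the resulting board (holes, completed lines, bumpiness) rather than only the landing height.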

You can interact with the interface and decide to change the decision. If you keep the "space" key pressed, the AI will solve the tetris in your place, trying to not be defeated by the level complexity. You can also have fun trying to complicate the scenario, the AI will try to solve the level in the best way possible. This demo exists because in the past I wanted to implement a bot (like GemFinder) to automatically solve tetris games. Then, I was occupied with my current job and I abandoned it.

Mandelbrot navigator

Old isometric engine

This is an old demo that I created during the development of an isometric engine for a PC port of the SNES game Equinox. The project was commissioned by a person who wanted to port this particular game to the PC world and then abandoned it for lack of money. The graphics look very similar to the SNES title; I remember that I did a pretty good job with the port.

This demo was also part of an old framework for creating video games that I developed in the past. The engine doesn't use 3D rendering or the GPU: it's entirely software-rendered. It implemented an original idea to handle isometric occlusion with a simple blit algorithm, without any variant (in fact, the algorithm could work with a simple BitBlt function call). As you can see, you can play only two rooms with collision detection; you can run, jump and hit enemies. Even though the demo has only two rooms, the engine was capable of handling the entire game. It's a shame that the project was abandoned.

TextureMind Framework – Progress #18 – AnsiC Parser

A new ANSI C parser was born inside TextureMind Framework. I'm programming this parser from scratch, starting by implementing all the standard ISO C89 features. Most importantly, the parser is designed to be extended to any other language, formal or not, which is the main reason why I didn't use an existing and well-proven parser, like Clang. Another reason is that the parser is designed to be lightweight and anchored to the framework's internal architecture.

I implemented all the main features and now the parser is able to parse a huge variety of AnsiC source codes. I was able to parse also the donut source code and to get the AST from it:

```c
             k;double sin()
         ,cos();main(){float A=
       0,B=0,i,j,z[1760];char b[
     1760];printf("\x1b[2J");for(;;
  ){memset(b,32,1760);memset(z,0,7040)
  ;for(j=0;6.28>j;j+=0.07)for(i=0;6.28
 >i;i+=0.02){float c=sin(i),d=cos(j),e=
 sin(A),f=sin(j),g=cos(A),h=d+2,D=1/(c*
 h*e+f*g+5),l=cos      (i),m=cos(B),n=s\
in(B),t=c*h*g-f*        e;int x=40+30*D*
(l*h*m-t*n),y=            12+15*D*(l*h*n
+t*m),o=x+80*y,          N=8*((f*e-c*d*g
 )*m-c*d*e-f*g-l        *d*n);if(22>y&&
 y>0&&x>0&&80>x&&D>z[o]){z[o]=D;;;b[o]=
 ".,-~:;=!*#$@"[N>0?N:0];}}/*#****!!-*/
  printf("\x1b[H");for(k=0;1761>k;k++)
   putchar(k%80?b[k]:10);A+=0.04;B+=
     0.02;}}/*****####*******!!=;:~
       ~::==!!!**********!!!==::-
         .,~~;;;========;;;:~-.
             ..,--------,*/
```

Pretty cool, isn't it? The source code has the shape of a donut and it generates a rotating 3D donut in ASCII characters. As you can see, it's not conventional source code: it's packed and full of tricks to save space, so it was a perfect test for my new parser. I was surprised to see that the parser was actually capable of parsing it, in about 1.37 ms. It would be very cool to execute it now! I plan to write an AST interpreter to manipulate the source code, translate it into another language and execute it. The AST structure can also be used to feed a virtual machine with instructions (like LLVM) or another compiler backend, like TinyC. The main goal is to use this parser for writing applications with full access to the framework's functionality.

Another goal is to implement a GLSL parser and manipulate the source code to integrate shader programs into my material system. It will be possible to copy & paste shaders from Shadertoy into material nodes, to create super complex materials for 3D rendering.

Future plans for TextureMind Framework and satellite projects

I'm very excited to announce the future plans for TextureMind Framework. First of all, let me tell you that I'm focusing all my energy on the development of the framework. I have been developing for months now, without stopping. I'm trying to keep it consistent and reach important targets quickly. In the previous months, I refactored the entire system of class interfaces, serialization, graphics devices and the graphical user interface, implementing new widgets.

New language Parser

Now that everything looks perfect, I'm focusing my efforts on another important feature: a brand new parser for programming languages. The parser will be capable of parsing source code according to given rules and producing an abstract syntax tree (AST). The AST will be used for many purposes. For instance, it will be possible to give the AST to a writer to produce the source code again, or to give it to an executor component that executes the instructions at runtime. The AST will also be used to feed a backend (like LLVM) and execute the instructions just in time.

GPU / CPU shaders

The AST will also be used to cross-compile GLSL shaders from Shadertoy and make them compatible with my internal material system. In this way, you can copy & paste shaders from Shadertoy and make them work directly in my framework without any further adaptation. Multiple GLSL shaders will be merged into a single GLSL / HLSL / SPIR-V shader without the need for ping-pong rendering: buffers will be converted into material nodes and channels will be converted into connections between material nodes. This is one of the most outstanding and absurd features I'm working on. Another feature is the possibility of executing shaders without the GPU, converting GLSL into ANSI C code that is compiled just in time and executed by the CPU, with multiple threads running in parallel in the same thread pool.

TextureMind Framework – Progress #17 – GUI – Advanced TextBoxes

I've continued with the implementation of the framework's proprietary GUI. In this case, I implemented a new widget to handle textboxes. As you can see in the video, this is not just a text box widget for entering some text, but a complete text editor that can be used for any purpose, from a simple notepad to editing programming languages.

All the main features are implemented, like Unicode support, text editing, scroll bars, multiline selection and clipboard cut & paste. The appearance of the text box can be customized, with a background texture and different colors. You can also rotate the window and apply complex geometric transformations to it. All the GUI in this video is rendered with the Vulkan libraries, but it can also be rendered with the Cairo library.

TextureMind Framework – Progress #16 – Modules and object interfaces

After years without updates, I'm glad to present what is probably the most important update so far. In the past, the framework was just a monolith of C++ static libraries that could be entirely or partially included within a project to access various functionality. On the one hand this was good, because it simplified the programming of the framework's parts; on the other hand it started to become a problem in terms of modularity and scalability. One of the worst complications was caused by the redundancy of the static library binaries: for example, if I had to create a plugin system, each dynamic library would have to include all the binaries with the functions to manage the various components of the framework.

Future plans for product and projects

I wanted to give you a short update about future plans for the projects related to this website.

TextureMind Framework

Currently I'm working on a very long refactor of the framework to improve the internal architecture and fix a lot of issues. After that, I will finalize the GUI and extend support to other operating systems besides Windows, like Linux and macOS. I will add support for Direct3D and OpenGL, while the software device will be extended to the Skia library for advanced functionality, like 16 bits per channel. I will improve the management of system fonts at application level, and add support for pre-calculated bitmap fonts and path rendering for GPU devices. I will also improve the 3D engine with PBR (Physically Based Rendering) and real-time ray tracing for the GeForce RTX series.

Unlimited Holter ECG

I will work on creating a device that can record a 7-lead ECG for days or weeks and that can be used as an external loop recorder. This is absolutely experimental and it will take months to reach a stable version. For now, I have been able to monitor my heart activity in real time and record an ECG trace. I'm working on implementing the loop recorder feature, the Holter monitor and the whole GUI to operate it from the electronic device. Then I will develop software for mobile devices (Android / iPhone) to download the ECG recordings, analyze them and produce valid reports. The software will be available from the respective online app stores. After that, I will design a PCB to move all the electronic components from the breadboard to a compact space, then I will design the plastic enclosure.

Unlimited Holter

Today it is difficult to find a device on the market that can be used to intercept cardiac arrhythmias, like ventricular tachycardia, that may occur infrequently but can pose a serious danger. Usually these products record for 60 seconds or at most 5 minutes, or even if they record for 24 hours, they are very expensive and difficult to use.

There are cases where there are no medical indications for implanting a loop recorder and there are no products that can replace it. People often purchase devices with metallic electrodes and poor signal quality, they cannot register arrhythmias as they occur, they are difficult to use, and they cannot function while the battery is charging: in practice they are totally useless for this kind of purpose. At present, there is not a single product on the market at an affordable price that can provide this kind of use.

The aim is to create a device that can be used as a Holter monitor and external loop recorder, with the possibility of recording even for months in order to catch difficult and infrequent arrhythmias like sustained or non-sustained, monomorphic or polymorphic ventricular tachycardia, ventricular fibrillation, supraventricular tachycardia, AV block, atrial fibrillation and so on. In the video, you can see the first working 7-lead prototype. As soon as possible, it will be integrated into a PCB to reduce its size. In the future, it will be available in the gift shop so you can have it.

Great news!

I finally decided to clean up this website and make a clear decision about it. This website won't be used anymore to publish tutorials, reviews, knowledge, thoughts, articles and so on, but only "projects and products" related to the website's activity. I removed all the useless categories and simplified a lot.

Now you can register an account on this website, log in and leave comments. You can donate to support projects and products on this website. The software will be really fantastic, so stay tuned because you will see some good ones. Bye!

TextureMind Framework – Vulkan renderer showcase

I created a video to show the potential of the Vulkan renderer included in my framework. The engine can now import 3D models from several 3D formats (including COLLADA) and render them with the Vulkan libraries.

The engine is equipped with a proprietary material system and mesh format. The materials of the imported models are converted to the engine's materials format which is then converted into the shaders that are used to make them work. As you can see from the video, the engine is already capable of rendering millions of triangles at high framerate and resolution (3840x2160).

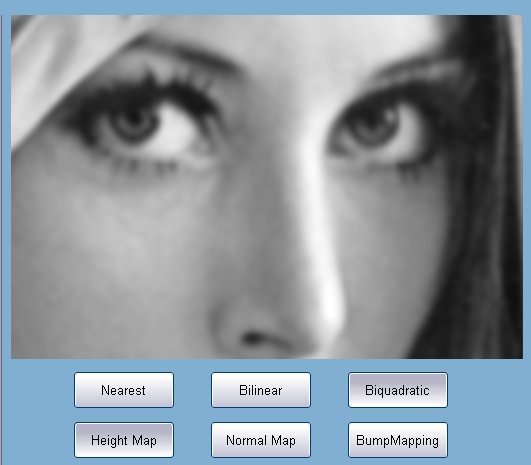

TextureMind Framework – Progress #15 – Vulkan – Bitmap Text Rendering

The Vulkan engine can now draw text with bitmap rendering. The engine checks which characters are on the screen and dynamically creates textures only for the fonts to be drawn, with the correct size and aspect.

The characters are prerendered into bitmaps with the FreeType library and loaded into Vulkan textures only if needed. When the text is no longer rendered, the font is deallocated to free space for other resources. In this way, fonts can be drawn as normal textured polygons, without a significant impact on performance. The text rendering algorithm is capable of drawing text with different alignment formats, including the "justified" one that you see in this video, like in any word processor. The GUI is drawn with the GPU and it can be used for video games or 3D applications that require advanced performance and functionality.
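As an example of what "justified" alignment requires from a layout algorithm, the sketch below distributes the leftover width of a line across the gaps between words. The function name and the integer pixel model are assumptions. The engine's actual algorithm is not described in the post.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Given the pixel widths of the words on one line, return the x offset of each
// word so that the line spans exactly lineWidth ("justified" alignment).
// The leftover space is split evenly across the gaps; the remainder pixels go
// to the leftmost gaps so that the total is exact.
std::vector<int> justifyLine(const std::vector<int>& wordWidths, int lineWidth) {
    std::vector<int> offsets;
    if (wordWidths.empty()) return offsets;

    int wordsTotal = 0;
    for (int w : wordWidths) wordsTotal += w;
    assert(wordsTotal <= lineWidth);

    const std::size_t gaps = wordWidths.size() - 1;
    const int extra = lineWidth - wordsTotal;
    const int base = gaps ? extra / static_cast<int>(gaps) : 0;
    int remainder = gaps ? extra % static_cast<int>(gaps) : 0;

    int x = 0;
    for (int w : wordWidths) {
        offsets.push_back(x);
        x += w + base;
        if (remainder > 0) { ++x; --remainder; }
    }
    return offsets;
}
```

Three words of 30, 20 and 40 pixels on a 100-pixel line are placed at 0, 35 and 60, so the last word ends exactly at the right margin. Word processors usually leave the last line of a paragraph left-aligned, which this sketch does not handle.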

Amiga Breathless for PC

This project is a port of Amiga Breathless to PC with modern GPU hardware. If you like this project and want it to continue in the future, please make a donation to support my work. It will be appreciated.

Downloads

Demo #2

After 4 years (!), I've found the time to release the second demo of Breathless, which is basically a rebuild with the latest version of TextureMind Framework. There are a lot of improvements; the most relevant ones are support for Linux (any distro from glibc 2.17 onwards) and AMD graphics cards (it should work on Intel GPUs too, but that's untested). Another improvement concerns shader programs: the lava is now rendered with a shader program bound to the material, instead of the original texture. Hopefully, the next demo release won't take so long :-)

Windows x86_64 (portable):

Download (5.7 MB)

Linux x86_64 / glibc 2.17 (portable):

Download (10.4 MB)

Demo #1

This is the first demo of Breathless for PC. In this demo, you can turn around your view with the mouse and set forward with the up/down cursors, eventually go up and down with the left / right mouse buttons. You can fly around the third stage with no collision detection applied. In the future demos, I will implement an actual walk with collision detection, and the missing code to open the doors.

As you can see, the graphics are very simple, without any lights, sky, characters or animated textures. That's because the 3D engine is still under development. As the next step, I will introduce a new lighting system with PBR. I do not rule out implementing a ray-tracing renderer as well in the future.

Background

The remake of Breathless is an old dream from when I was 14, along with other titles like Gloom, Alien Breed 3D, Super Stardust, Mario 64 and so on. This work is part of a bigger project called TextureMind Framework, developed to facilitate the creation of this kind of project, like games, demos, presentations and so on. The idea of remaking Breathless right now came when I saw that the 3D engine with Vulkan was stable enough to render any kind of map imported with the AssImp library. So why not import the Breathless maps and use the same 3D engine to render them?

I was amazed by the idea of rendering Amiga Breathless with the Vulkan libraries. The biggest obstacle was importing the maps from the old GLD format, which was more suitable for raycasting than for polygonal rendering. I started by downloading all the material from Aminet, at this link:

http://aminet.net/package/game/shoot/Breathless-1996-Source

Then I studied the format from the original C source code, in particular the map editor. The first major obstacle was that the editor was programmed to load maps only in uncompressed format, while all the maps were compressed in an unknown format called SLZ. I got 0 results on Google. I didn't know the format and I was about to abandon the project.

TextureMind Framework – Progress #14 – Vulkan – Skinned mesh

Skinned mesh rendering is a fundamental part of every modern 3D engine, so I couldn't avoid implementing it. The skinned mesh with weights, indices, bones, skeleton, and animated nodes is imported with the AssImp library into my format. I added weights and indices to the vertex attributes, while the bone matrices are written into a shader storage buffer object (SSBO). The skinned mesh is computed on the GPU, by the vertex shader.
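The arithmetic behind skinning with weights and indices can be sketched on the CPU in a few lines of Python. This is only an illustration of standard linear blend skinning, not the framework's vertex shader: the function names, the row-major 4x4 matrices and the two-bone example are all assumptions.

```python
def mat_vec(m, v):
    """Multiply a 4x4 row-major matrix by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def skin_vertex(position, bone_indices, bone_weights, bone_matrices):
    """Blend the position as transformed by each influencing bone."""
    p = list(position) + [1.0]  # homogeneous coordinates
    out = [0.0, 0.0, 0.0]
    for index, weight in zip(bone_indices, bone_weights):
        transformed = mat_vec(bone_matrices[index], p)
        for i in range(3):
            out[i] += weight * transformed[i]
    return out


def translation(tx, ty, tz):
    """Hypothetical helper: a bone matrix that only translates."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


bones = [translation(0, 0, 0), translation(2, 0, 0)]
skinned = skin_vertex((1.0, 0.0, 0.0), [0, 1], [0.5, 0.5], bones)
print(skinned)  # [2.0, 0.0, 0.0]
```

The vertex is influenced equally by a bone that stays still and a bone that moves 2 units along X, so it ends up halfway between the two transformed positions.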

In the video you can see the final result of the implementation. The model has been imported from Doom 3 format into my format, then animated and rendered by the 3D engine. For now, the quaternion keys are interpolated with a slerp on every frame. A possible optimization is to pre-calculate all the bones into an SSBO at a fixed frame rate (like 60 fps) and use it to render a massive number of meshes.
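For reference, a slerp between two quaternion keys can be written as follows. This is the textbook formula in plain Python, not the framework's implementation; the `(w, x, y, z)` component order and the 0.9995 threshold for the nearly-parallel case are assumptions.

```python
import math


def slerp(q0, q1, t):
    """Spherical linear interpolation between two unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q represent the same rotation: flip one to take the shorter arc.
        q1 = [-c for c in q1]
        dot = -dot
    if dot > 0.9995:
        # Nearly parallel keys: a linear blend avoids dividing by a tiny sine.
        out = [a + t * (b - a) for a, b in zip(q0, q1)]
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        s1 = math.sin(t * theta) / math.sin(theta)
        out = [s0 * a + s1 * b for a, b in zip(q0, q1)]
    # Renormalize to compensate for rounding and for the linear fallback.
    norm = math.sqrt(sum(c * c for c in out))
    return [c / norm for c in out]


identity = (1.0, 0.0, 0.0, 0.0)
quarter_turn_z = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
halfway = slerp(identity, quarter_turn_z, 0.5)  # a 45 degree rotation about Z
```

Sampling this function at a fixed rate for every bone is what the pre-calculation mentioned above would amount to.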

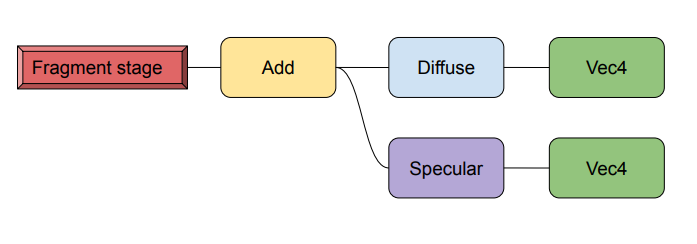

TextureMind Framework – Progress #13 – Vulkan – Advanced materials

I improved the material system, introducing the lighting stage. I removed the fragment stage and replaced it with a color stage and a lighting stage. The output color is calculated as the sum of the color stage and the lighting stage. The color stage has only one material node as input, which is used to produce the output color for this stage.

The lighting stage takes more inputs, like ambient, diffuse (or albedo), specular, roughness and metalness, which are mixed with physically based rendering (PBR). Each input is connected to one material node that can be the result of an operation between several material nodes, so every stage can have its own textures or a math operation between several textures, uniforms and constants. In the video you can see a model with advanced materials imported to show the benefits of the latest optimizations. In this case, the ambient stage is rendered correctly and mixed with the diffuse textures.

TextureMind Framework – Progress #12 – Vulkan – Import materials and normal maps

Now the importer based on the AssImp library can import model materials and textures into my format. I also added support for normal maps with tangent and bitangent vertex attributes, improving the lighting stage in the fragment shader to render them properly.

In the video you can see the nanosuit model imported from the COLLADA format. As the object rotates, you can see the benefits of bump mapping and specular textures.

TextureMind Framework – Progress #11 – Vulkan – Import 3D model

I decided to use the AssImp library to import models from other formats into my 3D mesh format. The video shows a first implementation of the importer.

Vertices and normals are converted along with the skeleton structure, while the red material is generated just to render the model on the screen. The next step is to load the materials and the associated textures.

TextureMind Framework – Progress #10 – Vulkan – Materials and 3D rendering

Finally, the very first 3D model rendered by the 3D engine. Even if it looks like a simple torus demo, the main feature this time is the format used for the 3D mesh and the conversion from material nodes to Vulkan shaders for the rendering.

The mesh is composed of a polygon hull, a set of vertex attributes and a layout that defines the nature of the vertex attributes. The polygon hull represents the geometric structure of the mesh, while the vertex attributes define its graphical and physical aspect. A mesh can have virtually any number of vertex attributes, such as position, normal, colors, texcoords and other new attributes used by the material.

Materials are composed of expression nodes, which are converted to shaders in a second step. Every material has a layout with the number of vertex attributes required for the rendering. The material structure used to render this model is the following:

The layout of the mesh doesn't have to match the material's layout exactly: if the mesh has the required vertex attribute then it's used, otherwise 0 values are used instead. It's up to the material to decide how to use the vertex attributes offered by the mesh. In this way, a single material can be used to render any kind of mesh. Of course, a mesh without normals cannot render diffuse or specular lighting, and a mesh without texcoords cannot render textures, normal maps and so on.
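The matching rule between the two layouts can be sketched like this in Python. The dictionary-based layout, the attribute names and the function `bind_attributes` are assumptions made for the example; the framework's real data structures are not shown in this post.

```python
def bind_attributes(material_layout, mesh_attributes, vertex_count):
    """Return one stream per attribute required by the material.

    material_layout maps an attribute name to its component count.
    Attributes the mesh does not provide are filled with zeros.
    """
    bound = {}
    for name, components in material_layout.items():
        if name in mesh_attributes:
            bound[name] = mesh_attributes[name]
        else:
            bound[name] = [(0.0,) * components for _ in range(vertex_count)]
    return bound


material_layout = {"position": 3, "normal": 3, "texcoord": 2}
mesh = {
    "position": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    "normal": [(0.0, 0.0, 1.0)] * 3,
}
bound = bind_attributes(material_layout, mesh, 3)
print(bound["texcoord"])  # [(0.0, 0.0), (0.0, 0.0), (0.0, 0.0)]
```

The triangle has no texcoords, so the material still receives a texcoord stream, but every texture lookup would sample the same texel.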

Uniform buffers can be used by a single mesh to change the material content, like colors or texture coords. For instance, the diffuse color in this material can be connected to a uniform owned by a 3D mesh, which can be changed on the fly, changing the color of the object. In this way, it's possible to reuse the same materials for multiple objects, even with different appearances, like particles or game characters.

TextureMind Framework – Progress #9 – Vulkan – Materials and textures

I improved the implementation of materials and textures with Vulkan. Now every material is translated into a GLSL shader that is converted into SPIR-V code with the shaderc library. The shader is generated along with the graphics pipeline to match the material settings. For now, materials are very simple and used to draw an image texture with alpha blending or a filled color.
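As a rough idea of what translating a material into GLSL can look like, here is a small Python sketch that emits fragment shader source for the two simple cases mentioned above. The material dictionaries, the function name `fragment_shader` and the variable names inside the shader are hypothetical; the compilation to SPIR-V with shaderc is not shown.

```python
def fragment_shader(material):
    """Return GLSL fragment shader source for a simple material description."""
    lines = ["#version 450", "layout(location = 0) out vec4 out_color;"]
    if material["type"] == "texture":
        # Textured material: sample an image at the interpolated coordinates.
        lines += [
            "layout(location = 0) in vec2 uv;",
            "layout(binding = 0) uniform sampler2D image;",
        ]
        expression = "texture(image, uv)"
    elif material["type"] == "color":
        # Filled color: bake the constant directly into the shader.
        r, g, b, a = material["rgba"]
        expression = f"vec4({r}, {g}, {b}, {a})"
    else:
        raise ValueError("unsupported material type")
    lines += ["void main() {", f"    out_color = {expression};", "}"]
    return "\n".join(lines)


print(fragment_shader({"type": "color", "rgba": (1.0, 0.0, 0.0, 0.5)}))
```

Alpha blending is a fixed-function setting of the graphics pipeline rather than part of the shader text, which is one reason why the pipeline has to be generated together with the shader.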

As you can see from the video, the GUI now has a normal appearance instead of the rainbow rectangles from before. The next step is to support path rendering and font rendering, for drawing the text. In the future, the same material system will be used to draw 3D content too.

TextureMind Framework – Progress #8 – Graphics context and 2D GUI with Vulkan

I am happy to announce that the Vulkan library has finally been integrated into my framework. For the moment nothing complicated: I limited myself to implementing a specialization of the Graphics Context that draws simple colored rectangles instead of the images drawn by the Cairo library. It's possible to invoke drawing commands with the same degree of complexity and practically identical management of textures, materials and uniforms, at the programming interface level.

Each rectangle is associated with a transformation matrix, which is translated into a uniform buffer. It's also possible to organize the rendering into multiple layers, allowing the reuse of command buffers with minimal programming effort.
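The step from a rectangle to the bytes of a uniform buffer can be illustrated with Python's `struct` module. This is a sketch under assumptions: the helper names are hypothetical, the matrix maps a unit square to the rectangle, and the data is packed in column-major order because that is the default layout GLSL expects for a `mat4`.

```python
import struct


def rect_transform(x, y, width, height):
    """4x4 matrix (row-major here) mapping the unit square to the rectangle."""
    return [
        [width, 0.0, 0.0, x],
        [0.0, height, 0.0, y],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]


def pack_uniform(matrix):
    """Pack the matrix as 16 little-endian floats in column-major order."""
    column_major = [matrix[r][c] for c in range(4) for r in range(4)]
    return struct.pack("<16f", *column_major)


data = pack_uniform(rect_transform(10.0, 20.0, 100.0, 50.0))
print(len(data))  # 64
```

The 64 bytes are what would be copied into the mapped memory of the uniform buffer before drawing the rectangle.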

As you can see from the above image, the 2D GUI based on the graphics context works quite well. It's possible to drag the windows and see them move on the screen at a high framerate, which is the main reason why it's worth bothering with the Vulkan libraries.

For the moment there is an implementation of textures and materials, but I have not yet finished the rendering part at shader level. The difficulty lies in the fact that the framework must resolve the material nodes to extract the proper GLSL shader to be converted into SPIR-V, create a suitable graphics pipeline and set it before rendering. The next step is to finish this part and make the 2D GUI identical to the Cairo version.

Then I can proceed with implementing 3D functionality, with full material management. The main goal is to implement an importer with the AssImp library and load 3D models. Then I will proceed to refine the 3D functionality with a sophisticated engine optimized for modern real-time computer graphics.

TextureMind Framework – Progress #7 – 2D GUI with Cairo

Finally I arrived at a first working version of the 2D GUI based on the Cairo libraries. The entire GUI architecture is based on 2D Engine components like the graphics and the physics engines. The graphics engine makes use of a graphics context that in this implementation is based on Cairo, but it can be specialized with any library.

As you can see in the video, I reused an old skin from Windows XP, but the skin is totally programmable and it will be changed in the future. For now there are only simple widgets like form windows, buttons, options and check boxes. The next step is to implement other composite widgets like scroll bars, text boxes, tabs, lists, treeviews and so on. This GUI can be used for video games or to produce professional applications. The GUI is designed to run in full screen or using the widgets of the operating system. The full screen variant can be specialized to work with GPU libraries, like Direct3D or Vulkan. As a modern feature, a transform matrix can be applied to every widget, so they can be translated, rotated, scaled or skewed with matrix operations. The interface can be designed with an external editor and not with code embedded inside the application. The only code required on the application side is the one used to manage the widget events.

TextureMind Framework – Progress #6 – 2D Engine and assets

Having a graphics context to draw something on the screen is not enough when you have to deal with complex scenes made of many textures, materials, shapes and assets of any kind. This is the reason why at some point of my framework development I introduced the concepts of Scene, Engine and Resources. Basically, a Scene is a collection of elements, which can be 2D or 3D objects like shapes or meshes, while the Engine is a component used to handle the scene, and Resources are a set of textures, materials and assets. All these kinds of resources are referenced by elements with UUID strings.
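The relationship between elements and resources can be sketched with a few Python dataclasses. The class and field names below are assumptions chosen for the example, not the framework's API; the point is only that an element stores a UUID string and the resource itself lives in a shared collection.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Resources:
    """A collection of textures, materials and assets, indexed by UUID string."""
    items: dict = field(default_factory=dict)

    def add(self, resource):
        key = str(uuid.uuid4())
        self.items[key] = resource
        return key

    def resolve(self, key):
        return self.items[key]


@dataclass
class Element:
    """A scene element that refers to its material by UUID, not by pointer."""
    name: str
    material_id: str


@dataclass
class Scene:
    elements: list = field(default_factory=list)


resources = Resources()
red = resources.add({"kind": "color", "rgba": (1.0, 0.0, 0.0, 1.0)})
scene = Scene([Element("square", red), Element("circle", red)])
for element in scene.elements:
    print(element.name, resources.resolve(element.material_id)["kind"])
```

Both elements share the same material, and the scene can be saved or loaded without caring where the material is stored in memory.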

I implemented different kinds of Engines. The 'Generic' Engine is used to pre-process the scene to prepare it for the rendering or possibly for other kinds of operations, like collision detection. When the generic engine iterates over the scene, all its internal geometries are transformed to be placed on the screen. The 'Graphics' Engine translates the transformed scene into a series of draw commands for the graphics context. The picture above shows a simple test of the Engine, with an element that is a 2D shape composed of three sub-paths (1 contour and 2 holes), with a radial texture material for the fill and a color material for the external stroke. Even if this test is simple, the Engine is designed to handle far more complex scenes and it will be used to create a whole 2D GUI from scratch.

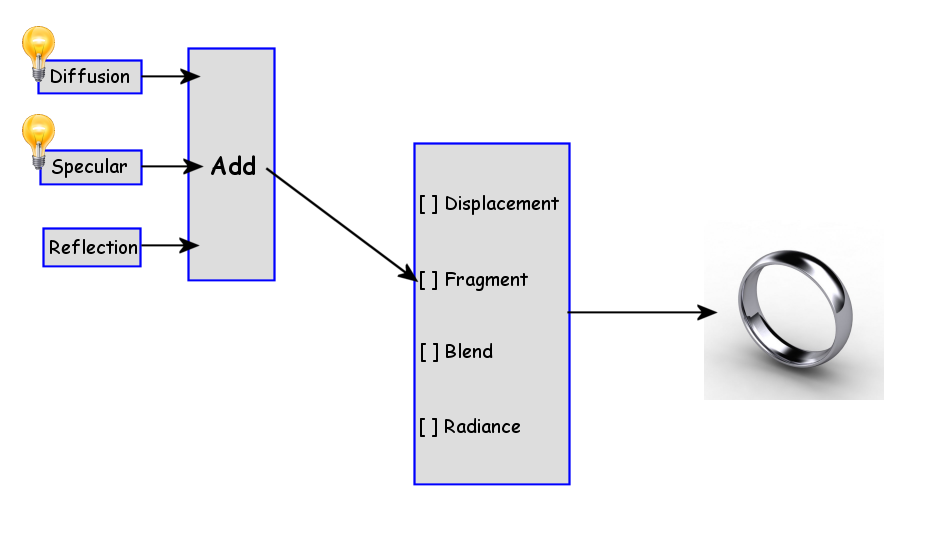

TextureMind Framework – Progress #5 – Materials and path rendering

In my framework, I designed materials to be extremely scalable. First of all, I decided to abandon the old format similar to 3D Studio Max or Maxon Cinema 4D and adopt another format more similar to UE4 that is based on Visual Expression Nodes, where one node in this case is called "material component".

A material is composed of different stages: displacement, fragment, blend and radiance. Every stage has parameters and a single component as input, which can be a texture with texture coords, diffusion with lights and normals, or the combination of several components with "add" or "multiply" nodes.
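A tree of material components with "add" and "multiply" nodes can be evaluated recursively. The Python sketch below shows the idea on the CPU; the dictionary representation, the node type names other than "add" and "multiply", and the function `evaluate` are assumptions made for illustration.

```python
def evaluate(node, inputs):
    """Evaluate a material component tree to an RGB tuple."""
    kind = node["type"]
    if kind == "constant":
        return node["value"]
    if kind == "input":
        # A value supplied from outside, e.g. a texel already sampled from a texture.
        return inputs[node["name"]]
    if kind in ("add", "multiply"):
        a = evaluate(node["a"], inputs)
        b = evaluate(node["b"], inputs)
        if kind == "add":
            return tuple(x + y for x, y in zip(a, b))
        return tuple(x * y for x, y in zip(a, b))
    raise ValueError(f"unknown component type: {kind}")


# The single input component of a stage: a texel darkened by a constant.
stage_input = {
    "type": "multiply",
    "a": {"type": "input", "name": "texel"},
    "b": {"type": "constant", "value": (0.5, 0.5, 0.5)},
}
color = evaluate(stage_input, {"texel": (1.0, 0.5, 0.0)})
print(color)  # (0.5, 0.25, 0.0)
```

Because each stage has a single component as input, any combination of textures and constants has to be expressed as one tree like this.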

If program shaders are supported by the graphics context specialization, the material is translated into a program shader, otherwise it will be rendered as best as possible, with the component types supported by the graphics library.

TextureMind Framework – Progress #4 – Windows and Cairo graphics context

I implemented a set of classes to handle system windows and events. Now it's possible to open a window and draw an image inside. I also programmed an abstract class for the graphics context to handle the graphics functionality common to the most important graphics libraries, like DirectX, OpenGL and Vulkan, even if the first specialization of the context makes use of the Cairo library to support software rendering.

The abstraction layer makes the context compatible with the features available from the graphics library that is specializing it. For example, Cairo has support for linear and radial patterns and path rendering, but it cannot define other patterns with program shaders. If a feature is not supported by the library, it is reported as not supported by an enum function exposed by the abstract class. In this way, the component that is using the rendering context is aware of the features that are available and can make the best use of them. The image shown in the example is a demo written with the specialized class that makes use of the Cairo library, with a linear pattern and path rendering.
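The feature query described above could be shaped roughly as follows. This Python sketch uses hypothetical names (`Feature`, `Support`, `GraphicsContext.query`) and a stand-in `CairoContext` class that does not call the Cairo library at all; it only shows how a caller can ask the context what is available before choosing a rendering path.

```python
from abc import ABC, abstractmethod
from enum import Enum, auto


class Feature(Enum):
    LINEAR_PATTERN = auto()
    RADIAL_PATTERN = auto()
    PATH_RENDERING = auto()
    PROGRAM_SHADERS = auto()


class Support(Enum):
    SUPPORTED = auto()
    NOT_SUPPORTED = auto()


class GraphicsContext(ABC):
    """Abstract context: every specialization reports what it can do."""

    @abstractmethod
    def query(self, feature):
        ...


class CairoContext(GraphicsContext):
    """Stand-in for a software specialization without program shaders."""

    _features = {
        Feature.LINEAR_PATTERN,
        Feature.RADIAL_PATTERN,
        Feature.PATH_RENDERING,
    }

    def query(self, feature):
        if feature in self._features:
            return Support.SUPPORTED
        return Support.NOT_SUPPORTED


context = CairoContext()
if context.query(Feature.PROGRAM_SHADERS) is Support.NOT_SUPPORTED:
    print("falling back to fixed patterns")
```

Returning an enum instead of a boolean leaves room for intermediate answers, such as a feature that is only emulated.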

TextureMind Framework – Progress #3 – Graphics context and external libraries